Stanford Researchers Unveil Study on Political Inclination Prediction Using AI

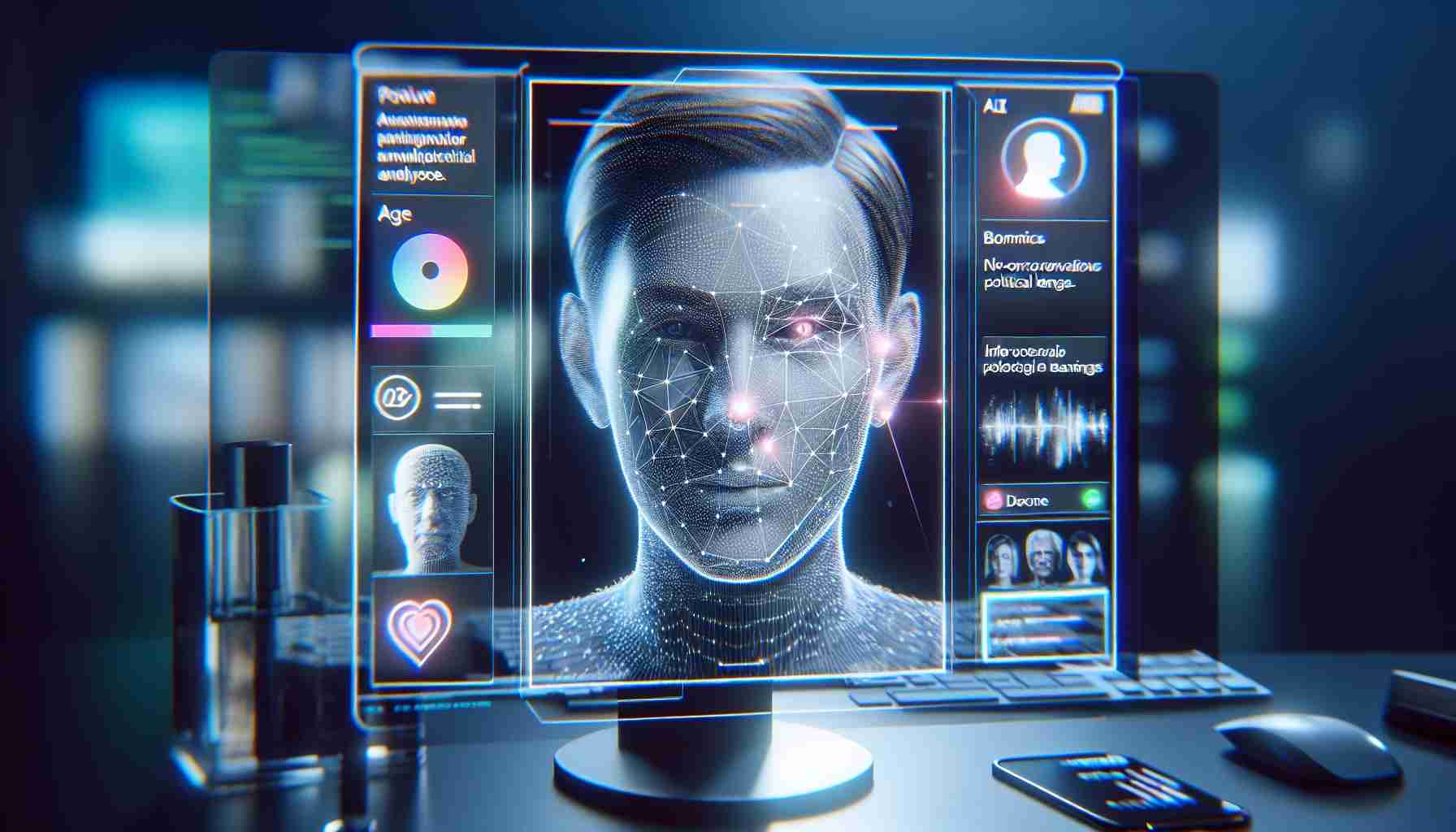

A recent study published in the journal “American Psychologist” indicates that facial recognition combined with artificial intelligence can accurately assess an individual’s political leanings from nothing more than an image of their neutral, expressionless face.

The study was written by researchers from Stanford University’s Graduate School of Business. They began the experiment by administering a political questionnaire to 591 participants to establish their political views. An artificial intelligence algorithm developed by the researchers then analyzed the same participants’ faces and tried to place each of them on the political spectrum. The algorithm was found to be highly accurate in identifying an individual’s political orientation, even when age, gender, and ethnicity were not taken into account.

Improvement in Predictive Accuracy with Demographic Data

The algorithm’s predictive accuracy improved further when it also used information about the participants’ age, gender, and ethnic background. The researchers quantified the accuracy statistically and put it in context through comparisons, noting that the predictions were about as reliable as job interviews are at foretelling workplace success, or as alcohol consumption is at indicating aggression.
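One common way to express this kind of accuracy is a correlation coefficient between predicted and self-reported scores. The sketch below is not the researchers' code or data. It uses purely synthetic numbers, and the names `face_signal`, `demographic_signal`, and `simulate`, as well as all weights and noise levels, are hypothetical choices made for illustration. It only shows why a predictor that also uses demographic information can correlate more strongly with the questionnaire result than one that uses a face-derived signal alone.

```python
import math
import random


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def simulate(n=591, seed=0):
    """Return (r_face_only, r_with_demographics) on synthetic data.

    Assumption: the self-reported orientation depends equally on a
    face-derived signal and a demographic signal, plus random noise.
    These weights are invented for the example, not taken from the study.
    """
    rng = random.Random(seed)
    face_signal = [rng.gauss(0, 1) for _ in range(n)]
    demographic_signal = [rng.gauss(0, 1) for _ in range(n)]
    orientation = [
        0.5 * f + 0.5 * d + rng.gauss(0, 0.7)
        for f, d in zip(face_signal, demographic_signal)
    ]
    # Predictor A uses the face signal only; predictor B adds demographics.
    combined = [f + d for f, d in zip(face_signal, demographic_signal)]
    return pearson(face_signal, orientation), pearson(combined, orientation)


r_face_only, r_with_demographics = simulate()
print(f"face only:         r = {r_face_only:.2f}")
print(f"face+demographics: r = {r_with_demographics:.2f}")
```

The sample size of 591 mirrors the number of participants mentioned above; everything else in the simulation is made up, so the printed values say nothing about the study's actual results.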

Liberal vs. Conservative Facial Features

The researchers observed variations in the average facial features of the most liberal and conservative men and women, incorporating this data into their analysis. They found that liberals often had a smaller lower face with downward-angled lips and nose, and a smaller jawline than conservatives.

The Intersection of Facial Features and Psychological Traits

Moreover, researchers posited that the appearance of one’s face might shape psychological traits. Social expectations linked to physical looks could influence personality development, suggesting that individuals with characteristics perceived as dominant (like a pronounced jaw, often associated with conservatism) might increasingly embody those traits over time.

Implications for Biometric Surveillance Technology

This alleged correlation between distinct facial morphology and political orientation enabled the researchers to build a database of faces associated with various political stances and to test how accurately the facial recognition algorithm predicted the corresponding political inclinations. They claim success, suggesting that both humans and algorithms can predict political orientation from neutral facial images. The study also remarks that widespread biometric surveillance technologies may be more invasive than previously thought, especially in contexts such as the targeted delivery of online political messages.

Concern for Privacy and Ethical Considerations

The research underscores artificial intelligence’s ability to deduce an individual’s political orientation, a capability that holds significant value for targeted advertising and could raise concerns regarding privacy and freedom of expression in digital spaces.

Key Questions and Answers:

– Can AI accurately predict political leanings from facial analysis? Yes, according to the study by Stanford researchers, AI can accurately predict political leanings using facial recognition technology, even without specific demographic data.

– What role does facial morphology play in the study’s findings? The study notes that liberals and conservatives tend to have distinct facial features, and these differences were factored into the AI algorithm’s predictive analysis.

– What are the possible societal implications of this technology? This technology could have significant implications for privacy, potentially leading to more invasive biometric surveillance and targeted political advertising.

Key Challenges or Controversies:

One key challenge in this area is the ethical concern over using AI to infer political leanings from facial images. There is a risk of misuse and privacy invasion, as individuals may not consent to such data being collected or used for political analysis. Predictions of political orientation may also be inaccurate, given the diversity within political groups and the way individual beliefs evolve over time. Some critics argue that the technology could strengthen biases and discrimination, particularly if it becomes more widespread without adequate regulation.

Advantages:

– Enhanced capabilities for targeted advertising, potentially making political campaigns more efficient.

– Opportunities for academic inquiry into the intersection of physical appearance and psychological traits.

– Improvement in algorithms’ predictive power when demographic data is provided.

Disadvantages:

– Potential for privacy infringements if such technology is used without consent.

– Risk of misuse by authoritarian regimes for monitoring and controlling political dissent.

– Ethical concerns about the fundamental right to freedom of expression and association if individuals are profiled politically based on facial analysis.

– The possibility of perpetuating or exacerbating stereotypes through biased AI predictions.

The article does not cover how AI has been used for predictive analytics in other contexts, such as health diagnostics or consumer behavior. General principles of machine learning, such as the need for large data sets and the potential for bias in algorithms, would also give a deeper understanding of how the AI in the study might function.

For more information, one might explore the websites of reputable organizations that offer insights on AI ethics and privacy concerns:

– ACLU

– Electronic Frontier Foundation

– Stanford University

– Association for Computational Linguistics