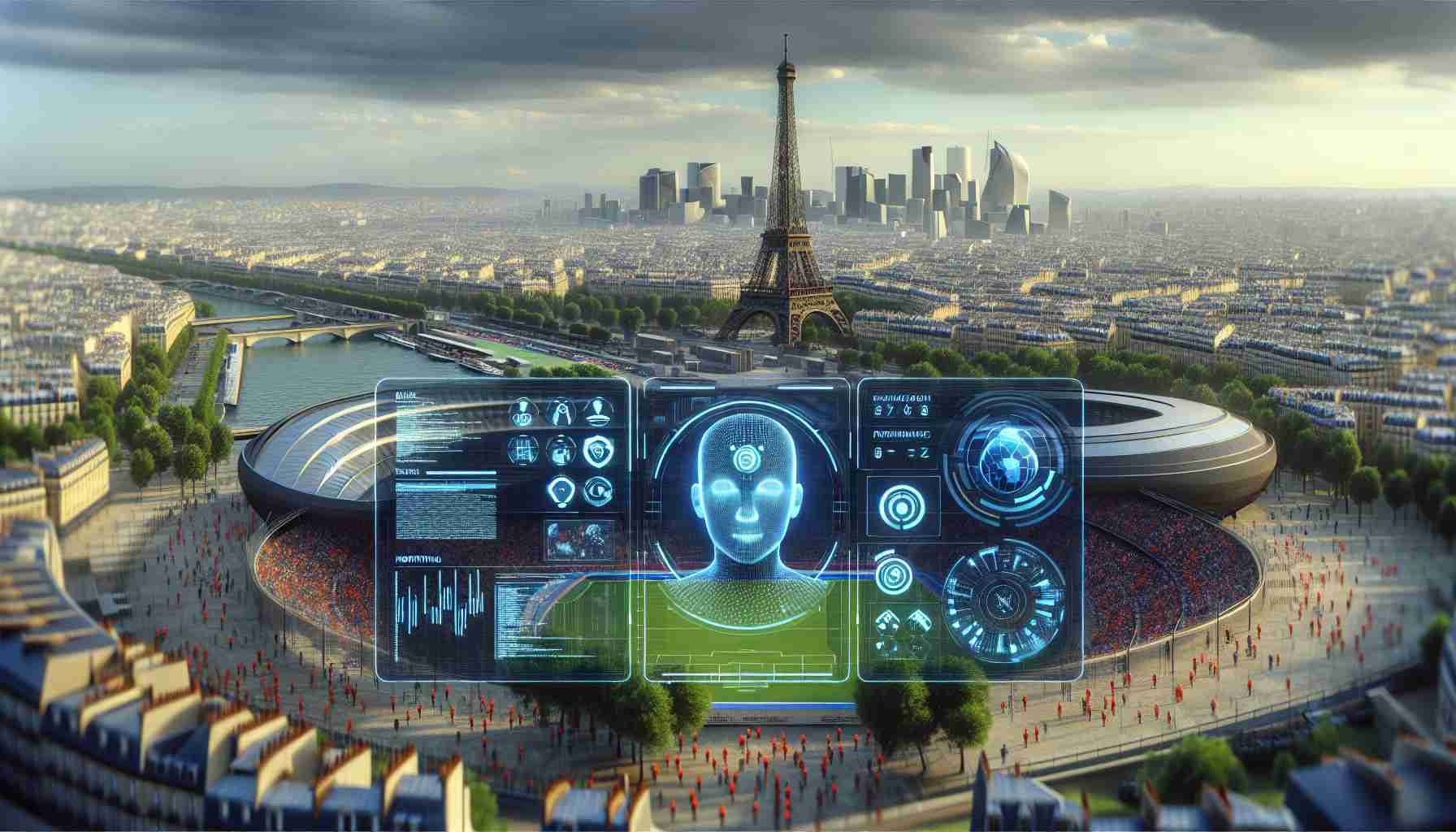

The International Olympic Committee (IOC) will deploy an AI-driven monitoring system to protect athletes and officials from cyberbullying during the upcoming Olympic and Paralympic Games in Paris. The committee has outlined its plans for the system, which it presents as a new approach to online protection.

The system is designed to monitor thousands of social media accounts across major platforms and to interpret content in more than 30 languages. It is intended to detect potential threats so that harmful messages can be handled proactively: the respective social media platforms can address offensive posts before the targeted individuals ever see them.
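The IOC has not published how its system works, so the following is only a minimal sketch of the general flow described above: score incoming posts, keep those aimed at monitored accounts, and pass the suspicious ones on for platform review. Every name here (`Post`, `abuse_score`, `flag_for_review`, the term list and the threshold) is a hypothetical placeholder, and the keyword matcher is a toy stand-in for a real multilingual classifier.

```python
from dataclasses import dataclass

# Hypothetical toy term list; a real system would rely on a trained
# multilingual model rather than keyword matching.
ABUSIVE_TERMS = {"loser", "disgrace", "perdedor"}


@dataclass
class Post:
    post_id: str
    platform: str
    target_account: str
    text: str


def abuse_score(text: str) -> float:
    """Return the fraction of words found in the toy term list (0.0 to 1.0)."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in ABUSIVE_TERMS)
    return hits / len(words)


def flag_for_review(posts, monitored_accounts, threshold=0.2):
    """Collect posts aimed at monitored accounts whose score meets the threshold."""
    flagged = []
    for post in posts:
        if post.target_account not in monitored_accounts:
            continue  # only accounts that are being monitored are checked
        if abuse_score(post.text) >= threshold:
            flagged.append(post)
    return flagged


posts = [
    Post("1", "platform_a", "@athlete_x", "Great race today!"),
    Post("2", "platform_a", "@athlete_x", "You are a disgrace, loser"),
    Post("3", "platform_b", "@someone_else", "loser"),
]
flagged = flag_for_review(posts, monitored_accounts={"@athlete_x"})
print([p.post_id for p in flagged])  # prints ['2']
```

In this sketch the flagged posts are only collected. Acting on them is left to the platforms, which mirrors the dependence on platform cooperation noted later in the article.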

The IOC’s Sport Safety Leader, aware of the effect cyberbullying can have on performance, assures athletes that they can stay focused on the competition while everything else is handled efficiently and securely. The aim is to give athletes peace of mind that negativity from online sources will be managed swiftly, leaving them free to concentrate on their athletic endeavors.

The use of artificial intelligence to monitor online activity is a first for the IOC. It reflects a growing commitment to maintaining a secure and positive online environment for the many competitors taking part in the Games. This matters all the more because so many athletes compete at the same time, each potentially exposed to a global digital audience.

Key Questions & Answers:

Q1: What type of AI technology is being used to shield athletes from online abuse?

A1: The specifics of the AI technology have not been provided. The system is described as capable of monitoring social media accounts across multiple platforms and interpreting content in more than 30 languages in order to detect potential threats and enable proactive responses.

Q2: How will this AI system improve safety for athletes?

A2: By detecting harmful messages and threats, the AI system aims to prevent online abuse from reaching athletes, thus protecting their mental well-being and allowing them to focus on their performance without the distraction of negative online interactions.

Key Challenges or Controversies:

– One challenge could be the accurate detection of negative content in a variety of languages and contexts without infringing on free speech.

– The AI system’s ability to adapt to new forms of online abuse and the evolving language of cyberbullies is critical.

– There may be privacy concerns about the monitoring of social media accounts and the potential overreach of the technology.

– Some may question the effectiveness of AI in distinguishing between genuine abuse and benign interactions, potentially leading to censorship controversies.

Advantages:

– Protects athletes’ mental well-being by reducing their exposure to cyberbullying.

– Allows athletes to concentrate on competition without the distractions of negative online content.

– Sets a precedent for other large-scale events to incorporate similar systems for participant safety.

Disadvantages:

– Potential for false positives where non-threatening messages are misidentified as abuse.

– Privacy issues regarding the monitoring of online content.

– Dependence on cooperation from social media platforms to take action based on the AI system’s detection.
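The false-positive concern above can be made concrete with a small worked example. The sketch below assumes a classifier that outputs a score per message and a threshold that decides what gets flagged. The scores and labels are invented purely for illustration and are not data from the IOC system, and the function names are hypothetical.

```python
def confusion_counts(scores, labels, threshold):
    """Count true/false positives and negatives at a given flagging threshold."""
    tp = fp = fn = tn = 0
    for score, is_abuse in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_abuse:
            tp += 1  # abusive message correctly flagged
        elif flagged and not is_abuse:
            fp += 1  # harmless message wrongly flagged (false positive)
        elif not flagged and is_abuse:
            fn += 1  # abusive message missed
        else:
            tn += 1  # harmless message correctly left alone
    return tp, fp, fn, tn


# Illustrative scores and ground-truth labels (True means genuinely abusive).
scores = [0.9, 0.8, 0.6, 0.4, 0.3, 0.1]
labels = [True, True, False, True, False, False]

for threshold in (0.5, 0.2):
    tp, fp, fn, tn = confusion_counts(scores, labels, threshold)
    precision = tp / (tp + fp)  # share of flagged messages that are abusive
    recall = tp / (tp + fn)     # share of abusive messages that are caught
    print(f"threshold={threshold}: tp={tp} fp={fp} fn={fn} tn={tn} "
          f"precision={precision:.2f} recall={recall:.2f}")
```

With these toy numbers, lowering the threshold from 0.5 to 0.2 catches the one abusive message that was previously missed, but it also flags a second harmless message. That trade-off is what the false-positive and censorship concerns describe.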

For more information on the International Olympic Committee and its initiatives to protect athletes, visit the IOC’s official website. Note that the technical details of the AI system, and of how individual organizations respond to what it detects, may not be publicly available for proprietary and security reasons.