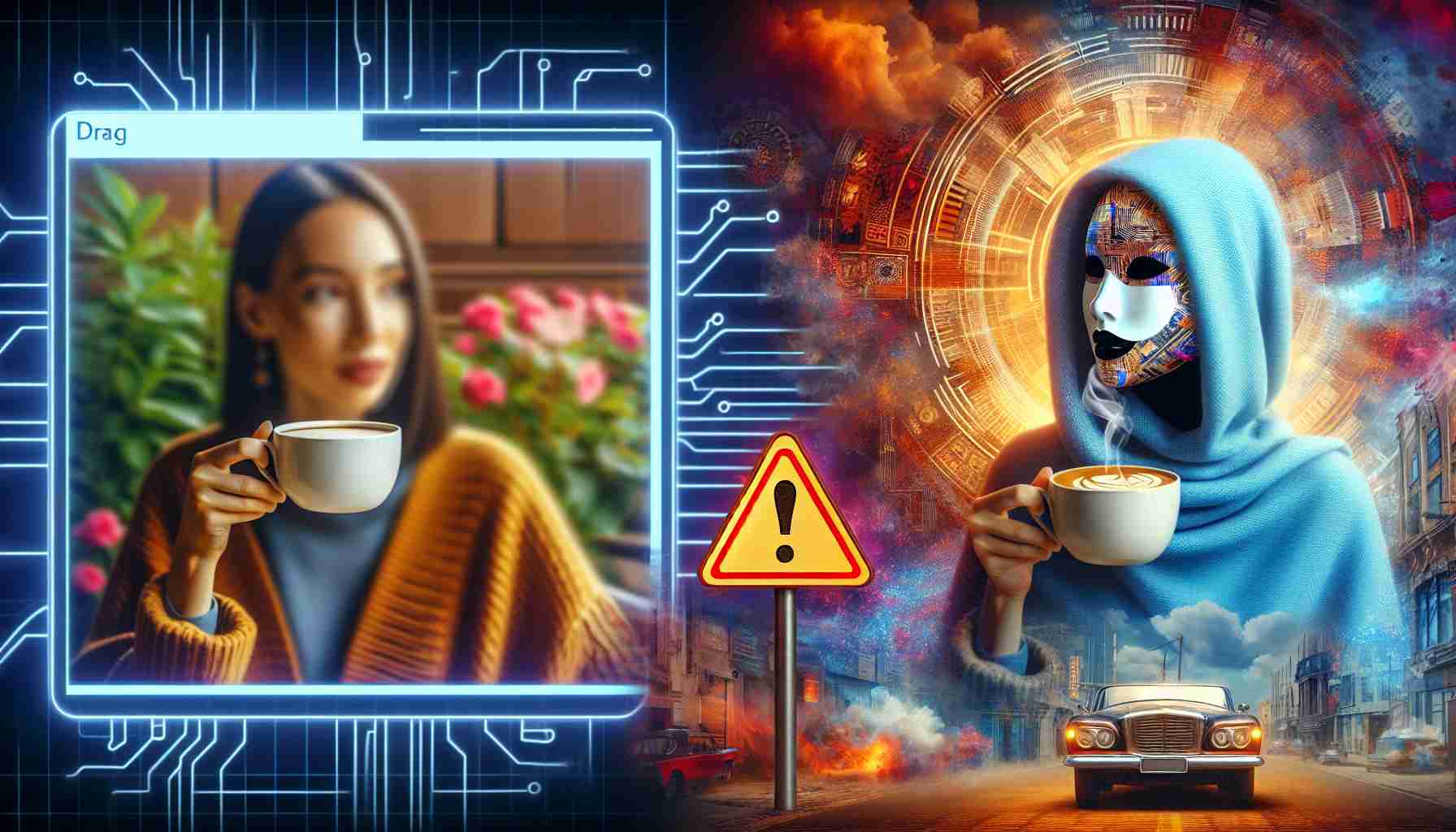

Deepfake technology, which uses artificial intelligence to manipulate images and videos into false representations, is raising concerns because it can be used to generate fake nude images from pictures of clothed individuals. An investigation by the technology news outlet 404 Media found that the ad library of Meta, the parent company of Facebook and Instagram, contained multiple advertisements for these “Nudify” applications.

These applications claim to use AI to produce fake nude images from photos of clothed people, a troubling illustration of how deepfake technologies can be misused. The practice is unethical by most societal standards, poses a significant threat to individual privacy, and can lead to public embarrassment or worse for those affected.

That such apps are openly advertised and accessible through major social media channels is deeply concerning. It underscores the need for greater vigilance, and potentially regulation, to prevent AI from being used to create and disseminate deepfake content without consent. The technology, while impressive, comes with a moral responsibility to safeguard individuals against cyber exploitation and privacy violations.

Risks and Challenges: The risks of using deepfake technology for non-consensual image alteration extend beyond fake nudity. The technology can also produce realistic-looking fake videos and images that undermine the credibility of public discourse and contribute to the spread of misinformation. Notable challenges include:

– Detection: As AI technology evolves, it is becoming increasingly difficult to distinguish between real and fake content. This presents a challenge for the platforms that host such content and for the individuals trying to spot fake images and videos.

– Legal and Ethical Concerns: There are considerable legal and ethical challenges around consent and the right to one’s likeness. Legislators are grappling with how to update privacy laws and criminal statutes to keep up with these technological advancements.

– Psychological Impact: For victims, the creation of deepfake content can lead to emotional distress, reputational harm, and in extreme cases, physical danger.

– Technological Misuse: While AI has the potential for significant positive impact, the misuse of deepfake technology can undermine trust in digital content, impacting politics, social relationships, and even national security.

Advantages and Disadvantages: Deepfake technology is a double-edged sword. It has advantages, such as:

– Creative Expression: Artists and filmmakers can use deepfakes for storytelling, special effects, and expanding creative horizons.

– Educational Content: It can generate educational materials and bring historical figures to life for better engagement in learning environments.

Yet it comes with considerable disadvantages:

– Privacy Violations: Creating altered or intimate images of a person without their consent infringes upon individual privacy rights and can be considered a form of digital harassment.

– Identity Theft: Deepfakes can enable a new form of identity theft, in which a person’s likeness is used without their permission, potentially for fraud.

Controversies: Deepfake technology is not inherently harmful, but its applications can be. Its use to create non-consensual intimate images has been highly controversial. Debate continues over regulation versus free speech, the technical measures needed to combat malicious use, and who should be responsible for monitoring and policing deepfakes.

Any use of deepfake technology that violates consent and privacy presents a significant ethical and legal issue. It is imperative that society, technology platforms, and regulators work together to establish rules and systems that prevent the misuse of this powerful technology while recognizing and preserving its potential for positive contributions.