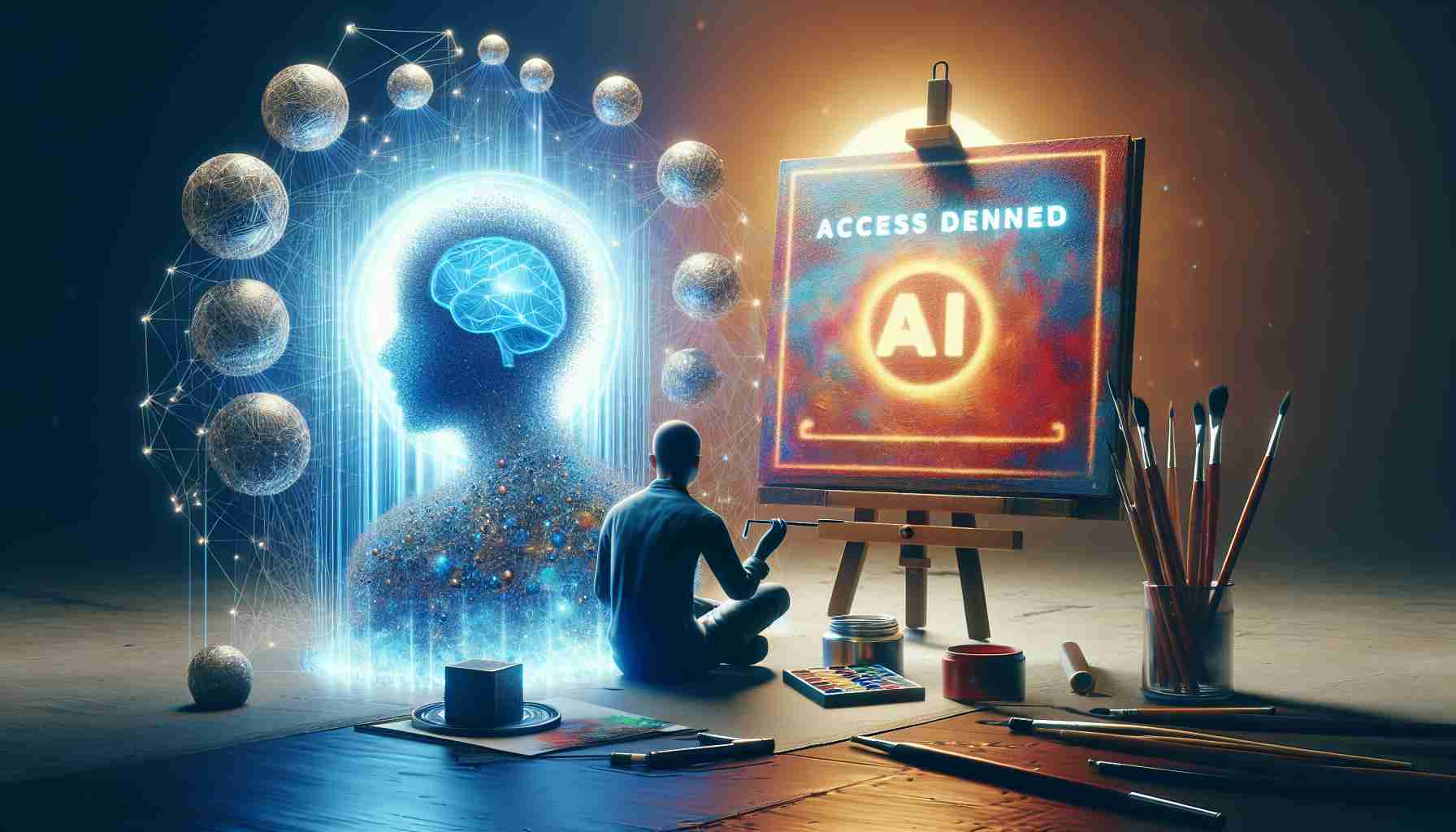

Artists around the world are increasingly worried as artificial intelligence (AI) tools generate images that mimic their unique styles. Mignon Zakuga, a book cover illustrator and video game artist, has seen potential clients choose AI-generated images instead of commissioning her work. The trend has raised concerns about the future of young and emerging artists in the industry.

To combat this problem, University of Chicago researchers Ben Zhao and Heather Zheng have developed two tools, Glaze and Nightshade. Both aim to protect artists’ work by altering an image’s pixels in ways too subtle for humans to notice but confusing to AI models. Much like optical illusions, these modifications trick AI models into perceiving the image differently.
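To make the idea of a change that is "too subtle for humans to notice but confusing to AI" more concrete, here is a toy sketch in Python. It is not how Glaze or Nightshade work internally; it assumes a hypothetical linear "feature extractor" (`feature_score`) and applies a single fast-gradient-sign step, a standard adversarial-example technique, with a per-pixel budget `eps`. All names and numbers are illustrative.

```python
# Toy illustration of a bounded, hard-to-see pixel perturbation that shifts
# a model's feature score. This is NOT the Glaze or Nightshade algorithm;
# the linear "feature extractor" below is a hypothetical stand-in.


def feature_score(pixels, weights):
    """Hypothetical linear 'feature extractor': a weighted sum of pixels."""
    return sum(p * w for p, w in zip(pixels, weights))


def sign(x):
    """Return 1, -1, or 0 depending on the sign of x."""
    return (x > 0) - (x < 0)


def perturb(pixels, weights, eps):
    """One fast-gradient-sign step on the linear score.

    For a linear score, the gradient with respect to each pixel is simply
    its weight, so moving every pixel by eps * sign(weight) raises the score
    as much as possible while changing no pixel by more than eps.
    Results are clamped to the valid [0, 1] pixel range.
    """
    return [min(1.0, max(0.0, p + eps * sign(w))) for p, w in zip(pixels, weights)]


if __name__ == "__main__":
    pixels = [0.5] * 8  # a tiny, flat gray "image"
    weights = [0.9, -0.4, 0.7, -0.8, 0.6, -0.5, 0.3, -0.2]
    cloaked = perturb(pixels, weights, eps=0.02)

    max_change = max(abs(a - b) for a, b in zip(cloaked, pixels))
    shift = feature_score(cloaked, weights) - feature_score(pixels, weights)
    print(f"largest per-pixel change: {max_change:.3f}")
    print(f"shift in feature score:   {shift:.3f}")
```

No single pixel moves by more than `eps`, yet the score shifts by `eps` times the sum of the absolute weights. Because that shift grows with the number of pixels, a real image with millions of pixels can, in principle, be nudged far in a model's feature space by changes a viewer would struggle to see.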

Glaze is designed to mask an artist’s unique style, preventing AI models from learning and replicating it. When “Glazed” images end up in training datasets, the AI models trained on them become confused and produce mixed, inaccurate outputs. Nightshade, on the other hand, takes a more aggressive approach by sabotaging existing generative AI models. It turns potential training images into “poison” that teaches AI models to associate fundamental ideas and images incorrectly.

These tools have given some relief to artists like Zakuga, who regularly uses Glaze and Nightshade to protect her work. However, the tools have limitations. Cloaking an image can be time-consuming, and the tools can only protect new work: once an image has been included in an AI training dataset, it is too late to cloak it. There is also no guarantee that the tools will remain effective in the long run; as AI models evolve, cloaking methods may become obsolete.

Despite these limitations, the tools offer a temporary solution for artists looking to safeguard their work from AI copycats. Zhao and Zheng continue to refine them, trying to strike a balance between disrupting AI models and preserving an image’s integrity. As the cat-and-mouse game between artists and AI continues, building robust, future-proof protection tools remains a challenge.

Frequently Asked Questions (FAQ)

Q: What concern do artists face in relation to artificial intelligence (AI)?

A: Artists are concerned because AI tools can generate images that replicate their unique styles, which may lead clients to choose AI-generated images instead of commissioning their work.

Q: What are Glaze and Nightshade?

A: Glaze and Nightshade are two tools developed by University of Chicago researchers Ben Zhao and Heather Zheng. They aim to protect artists’ work from AI by altering image pixels in subtle ways that confuse AI models.

Q: How does Glaze protect an artist’s work?

A: Glaze masks an artist’s unique style. When “Glazed” images are included in training datasets, they confuse AI models, resulting in mixed and inaccurate outputs.

Q: What is the purpose of Nightshade?

A: Nightshade takes a more aggressive approach by sabotaging existing generative AI models. It turns potential training images into “poison,” teaching AI models to associate fundamental ideas and images incorrectly.

Q: Do these tools completely protect an artist’s work?

A: No, these tools have limitations. Cloaking an image can be time-consuming, and the tools can only protect new work: once an image is included in an AI training dataset, it is too late to cloak it. Additionally, the tools may become less effective as AI models evolve.

Q: What is the current state of these protection tools?

A: The tools offer a temporary solution for artists, and researchers like Zhao and Zheng continue to refine and improve them. Balancing disruption of AI models against preserving image integrity remains a challenge in the ongoing cat-and-mouse game between artists and AI.

Key Terms:

– Artificial Intelligence (AI): The use of computers or machines to perform tasks that typically require human intelligence.

– Glaze: A tool that masks an artist’s style by modifying images so that AI models trained on them become confused.

– Nightshade: A tool that sabotages existing generative AI models by turning potential training images into “poison,” leading to incorrect associations.

– AI Copycats: AI-generated images that replicate an artist’s unique style.
