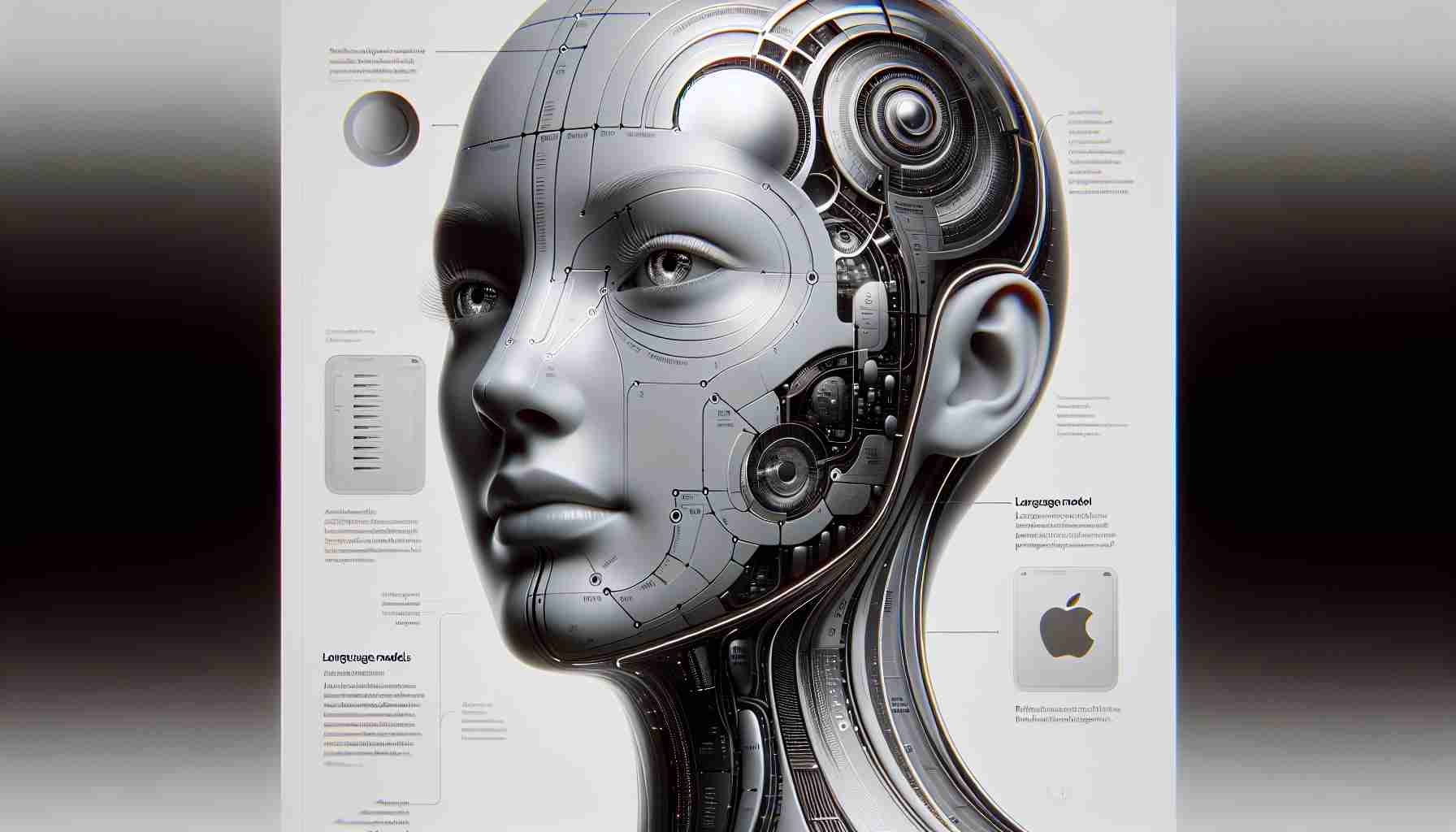

Artificial intelligence (AI) has taken a significant step forward with Apple’s unveiling of Ferret-UI, a multimodal large language model that goes beyond text-only comprehension. The model understands visual input, in particular images of app screens, alongside text, marking a notable advance in the field of AI.

Ferret-UI is designed to interpret mobile user interface (UI) screens, down to small elements such as app icons and fine text. Accurately locating such tiny UI elements has been a core obstacle for multimodal language models, which often process a screenshot at a single, fixed input resolution. Apple’s solution adds “any resolution” support to Ferret-UI, which effectively magnifies screen details and improves the model’s visual recognition.
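
For readers curious about the mechanism, here is a simplified Python sketch of the kind of aspect-ratio-based split that Apple’s Ferret-UI research paper describes for “any resolution”: a portrait screen is cut into top and bottom halves, a landscape screen into left and right halves, and the full screen is kept alongside both crops. The function name `split_screen` and the (left, top, right, bottom) box format are illustrative assumptions, not Apple’s code.

```python
from typing import List, Tuple

# Hypothetical box format: (left, top, right, bottom) in pixels.
Box = Tuple[int, int, int, int]


def split_screen(width: int, height: int) -> List[Box]:
    """Return crop boxes for the full screen followed by two sub-images.

    Illustrative sketch only: portrait screens are split into a top and
    a bottom half, landscape screens into a left and a right half.
    Square screens are treated as portrait (an arbitrary choice here).
    """
    full = (0, 0, width, height)
    if height >= width:
        # Portrait: horizontal cut into top and bottom halves.
        mid = height // 2
        subs = [(0, 0, width, mid), (0, mid, width, height)]
    else:
        # Landscape: vertical cut into left and right halves.
        mid = width // 2
        subs = [(0, 0, mid, height), (mid, 0, width, height)]
    return [full] + subs


if __name__ == "__main__":
    # A 1170 x 2532 portrait screenshot yields the full screen plus two halves.
    for box in split_screen(1170, 2532):
        print(box)
```

In a model built this way, each crop would then be resized to the image encoder’s input size and encoded separately, so a small icon covers more pixels in its half-screen crop than in the downscaled full screenshot.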

Ferret-UI also supports referring, grounding, and reasoning, which lets the model build a comprehensive understanding of a UI screen and carry out tasks based on its contents. In benchmark tests, Ferret-UI outperformed GPT-4V, OpenAI’s multimodal language model, on a range of elementary tasks, including icon recognition, OCR, widget classification, find icon, and find widget, on both iPhone and Android screens. GPT-4V held a slight edge in grounding conversations about UI findings, but Ferret-UI reports raw coordinates rather than choosing among predefined boxes, which makes it a compelling alternative.

Apple has not yet disclosed specific applications for Ferret-UI, but the researchers highlight its potential to improve UI-related features and extend the capabilities of voice assistants such as Siri. If Ferret-UI can understand a user’s app screen and act on that understanding, it could let Siri carry out complex instructions on its own, without the user having to spell out each step. This reflects a broader shift in AI assistants toward autonomous task completion, a trend exemplified by devices such as the Rabbit R1, which showcase AI’s potential to streamline user experiences.

As Ferret-UI develops, it holds considerable promise for reshaping UI applications and enriching voice assistant functionality. Apple’s work in this area strengthens its position in AI and pushes the field forward. Multimodal language models like Ferret-UI illustrate AI’s transformative potential across many sectors and point to a new era in how people interact with technology.

—

Frequently Asked Questions (FAQ)

This article originally appeared on the blog japan-pc.jp.