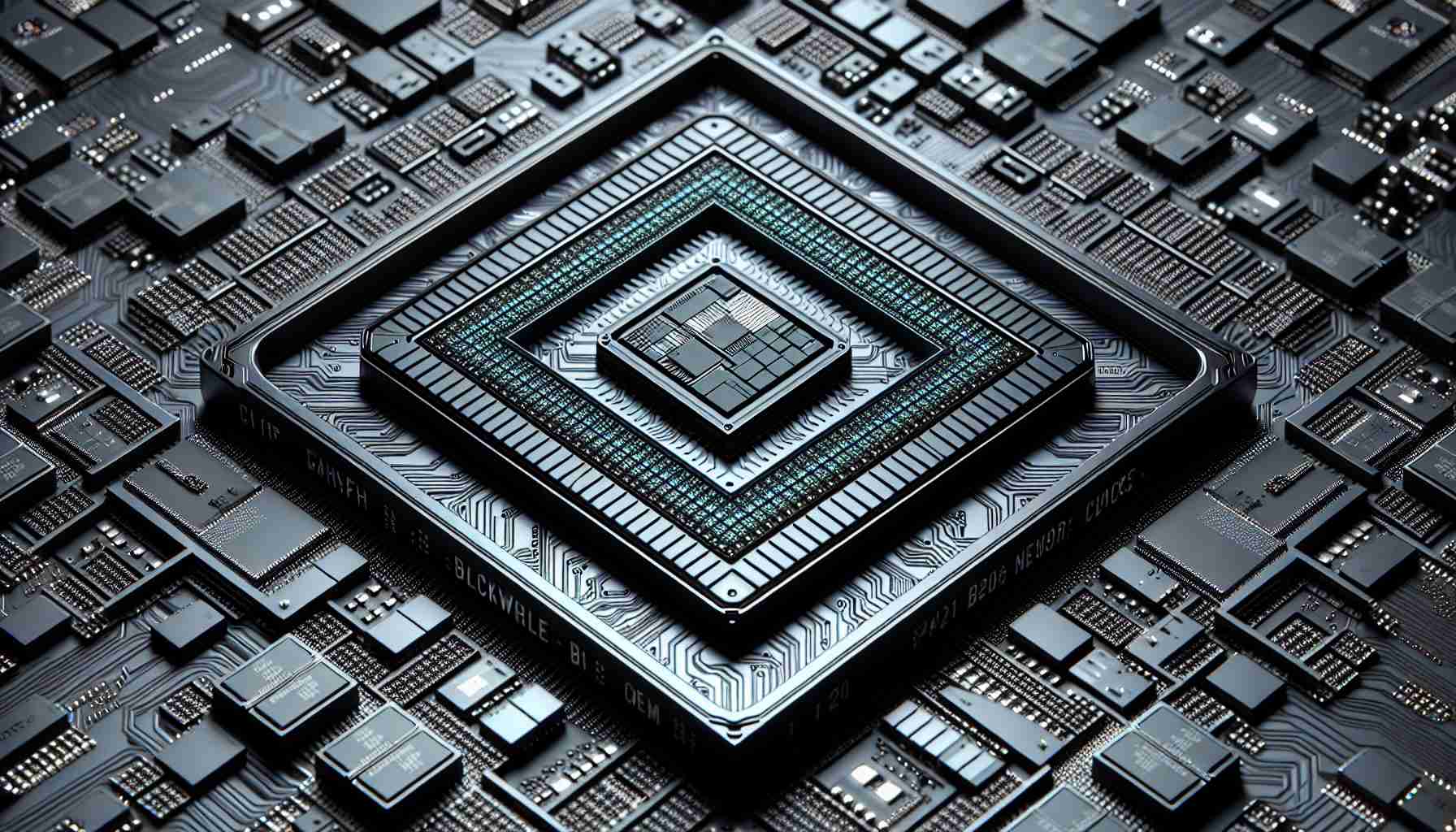

Nvidia recently announced its Blackwell B200 tensor core chip, its newest GPU for AI computing. With 208 billion transistors, this single-chip GPU is positioned by Nvidia as a way to reduce AI inference operating costs and energy consumption, and as the foundation for a new generation of AI applications.

Alongside it, Nvidia introduced the GB200 “superchip,” which combines two B200 chips and a Grace CPU for higher performance. The GB200 targets advanced AI tasks: pairing two GPUs with a CPU in one unit is meant to supply the compute that larger, more complex AI models require.

Industry response to the Blackwell platform has been positive. Major players such as Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI are expected to adopt it, drawn by its promise of lower costs, better energy efficiency, and higher performance.

Nvidia’s success in the AI market stems from the inherent strengths of GPUs, particularly their massively parallel architecture, which accelerates the matrix operations at the core of neural networks. Originally developed for gaming, GPUs turned out to be well suited to AI workloads. Nvidia’s strategic focus on data center technologies has in turn driven its financial success.
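To make the point about matrix operations concrete, here is a minimal pure-Python sketch of a toy neural-network layer. The function names and the sample values are illustrative assumptions, not anything from Nvidia's software stack. It shows that each output entry of a matrix multiply depends only on one row and one column, which is why the work can be spread across many GPU cores at once.

```python
def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    m, k, n = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    # Each out[i][j] depends only on row i of `a` and column j of `b`,
    # so all m * n entries are independent and could be computed in parallel.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]


def dense_layer(inputs, weights):
    """Toy neural-network layer: a matrix multiply followed by ReLU."""
    return [[max(0.0, v) for v in row] for row in matmul(inputs, weights)]


# Hypothetical values: a batch of 2 inputs with 3 features each, mapped to 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [0.0, -1.0, 1.0]]
weights = [[1.0, 0.0],
           [0.0, 1.0],
           [-1.0, 1.0]]
outputs = dense_layer(inputs, weights)
print(outputs)
```

On a CPU the loops above run one entry at a time; a GPU assigns the independent entries to thousands of threads, which is the advantage the paragraph describes.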

The Grace Blackwell GB200 chip is a key component of the NVIDIA GB200 NVL72 data center system and offers substantial performance gains over previous GPU models in both AI training and inference. The NVL72 system links 36 GB200s through fifth-generation NVLink, which gives it 72 B200 GPUs and 36 Grace CPUs in total, and it is aimed in particular at LLM inference workloads.
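The composition of the NVL72 follows from the figures already given in this article: two B200 chips and one Grace CPU per GB200, 36 GB200s per system, and 208 billion transistors per B200. The short sketch below only does that arithmetic. The constant and function names are made up for illustration, and the transistor total counts the B200 GPUs only, since the article gives no figure for the Grace CPU.

```python
# Figures taken from the article; names are illustrative assumptions.
B200_PER_GB200 = 2
GRACE_PER_GB200 = 1
GB200_PER_NVL72 = 36
TRANSISTORS_PER_B200 = 208_000_000_000


def nvl72_totals(superchips=GB200_PER_NVL72):
    """Return chip counts and GPU transistor total for a rack of GB200 superchips."""
    gpus = superchips * B200_PER_GB200
    cpus = superchips * GRACE_PER_GB200
    return {
        "gpus": gpus,
        "cpus": cpus,
        # GPU transistors only; Grace CPU transistors are not included.
        "gpu_transistors": gpus * TRANSISTORS_PER_B200,
    }


totals = nvl72_totals()
print(totals["gpus"], "GPUs,", totals["cpus"], "CPUs")
print(f"{totals['gpu_transistors'] / 1e12:.2f} trillion GPU transistors")
```

The 72 GPUs in the result are where the "72" in the NVL72 name comes from.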

While the Blackwell platform holds promise, the true test lies in how widely organizations adopt and use it. Competitors like Intel and AMD are actively vying for market share in the AI space, so Nvidia faces real competition. Blackwell-based products are expected to become available later this year.

---

**FAQ**

1. **What is the Blackwell B200 tensor core chip?**

– The Blackwell B200 tensor core chip is Nvidia’s most powerful single-chip GPU, with 208 billion transistors. It is designed to significantly reduce AI inference operating costs and energy consumption.

2. **What is the purpose of the GB200 “superchip”?**

– The GB200 “superchip” combines two B200 chips and a Grace CPU to enhance performance further. It is intended for advanced AI applications and tasks.

3. **What organizations are expected to adopt the Blackwell platform?**

– Major organizations such as Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI are expected to adopt the Blackwell platform for their AI-based operations.

4. **How do GPUs contribute to AI tasks?**

– GPUs are well-suited for AI tasks due to their massively parallel architecture, which accelerates matrix multiplication tasks necessary for running neural networks efficiently.

5. **What is the significance of the Grace Blackwell GB200 chip?**

– The Grace Blackwell GB200 chip is a key component of the NVIDIA GB200 NVL72 data center computer system. It enables enhanced AI training and inference tasks and offers substantial performance improvements compared to previous GPU models.

6. **Can the Blackwell platform lead to the creation of more complex AI models?**

– Yes. The Blackwell platform’s increased performance can enable the development of more complex AI models, which is particularly beneficial for compute-hungry generative AI models.

7. **What are the potential limitations and competition faced by Nvidia in the AI market?**

– While Nvidia has made significant claims about the Blackwell platform, its real-world performance and adoption by organizations remain to be seen. Competitors like Intel and AMD are also actively pursuing advancements in AI technology, creating competition in the market.

Source: the blog tvbzorg.com