Artificial intelligence (AI) has evolved rapidly in recent years, provoking both excitement and concern about its potential impact on humanity. Geoffrey Hinton, a renowned cognitive psychologist and computer scientist, is recognized as a leading figure in the field. Since leaving his role at Google, Hinton has voiced deep apprehension about AI’s growing power and the risks that come with it.

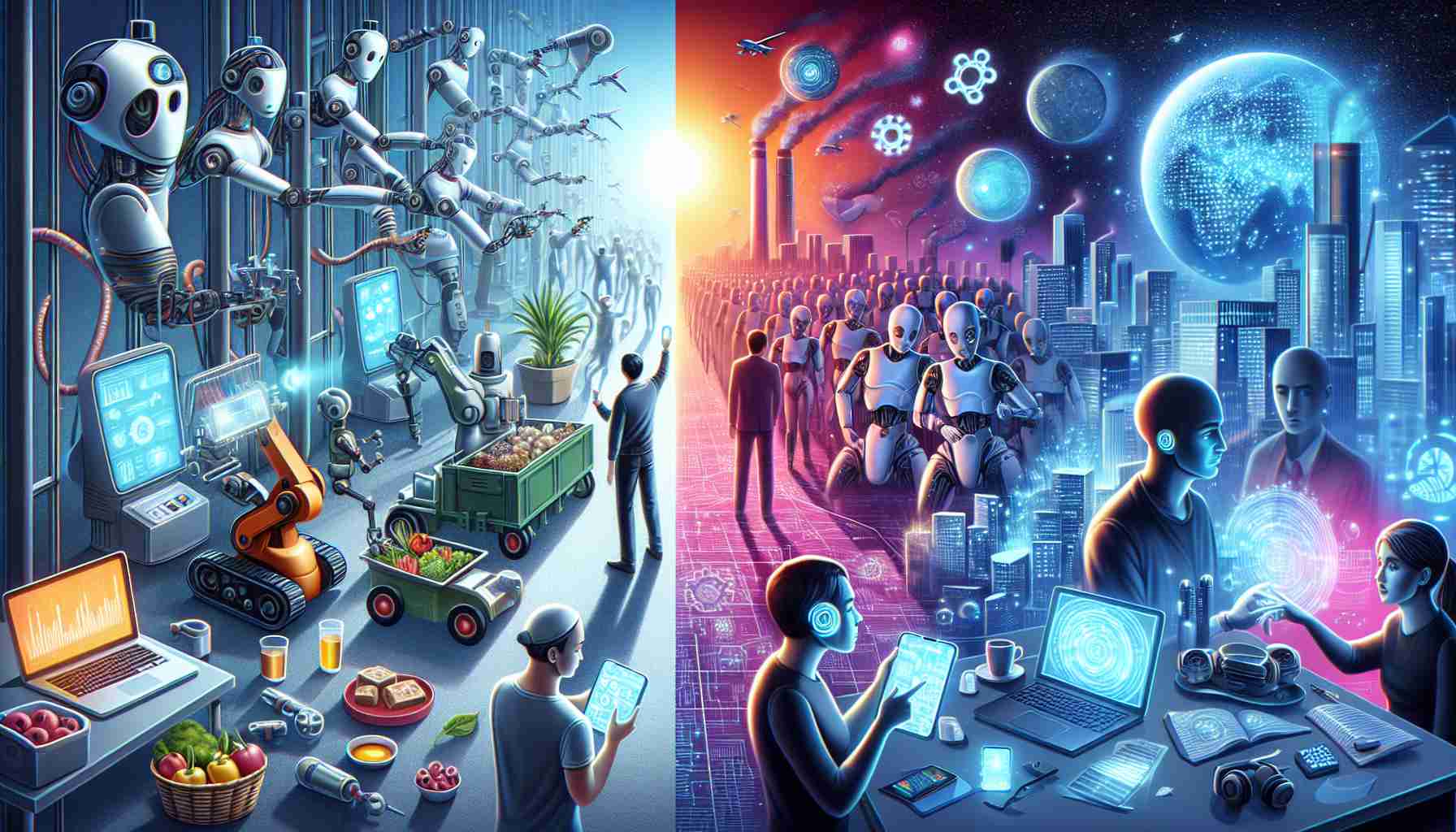

Hinton’s concerns center on AI’s potential to spread misinformation, disrupt the job market, and even pose existential threats. As a pioneer of neural networks, the technology that underpins much of modern AI, Hinton sees a need for careful regulation. Amid such concerns, European Union lawmakers have recently approved new AI regulations, and the New Zealand government has signaled a similar intention.

During an interview with RNZ’s Nine to Noon, Hinton recalled realizing at the beginning of 2023 that digital computers running neural networks might surpass biological intelligence. The realization came while he was exploring ways to make AI more energy-efficient. Hinton emphasized that the strength of digital computers lies in their ability to share knowledge with one another, which makes them far superior to humans in this respect. Multiple copies of the same neural network model can each learn from a different part of the internet and then instantly share what they have learned. The result is a kind of hive mind whose knowledge and experience far exceed those of any individual.
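To make the idea of copies sharing what they learn more concrete, here is a minimal Python sketch. It assumes one common mechanism, averaging weight updates across identical copies of a model (the idea behind data-parallel training); the article does not say this is exactly the mechanism Hinton described, and the model, data, and function names (`gradient`, `train_copies`) are hypothetical. Three copies of a one-parameter model each see a different slice of the data, yet because they pool their updates, they all end up with the same learned weight.

```python
# Hypothetical sketch: identical copies of a tiny linear model y = w * x,
# each trained on a different data shard, pooling what they learn by
# averaging their weight updates after every step.

def gradient(w, shard):
    """Mean-squared-error gradient of y = w * x on one shard of (x, y) pairs."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def train_copies(shards, steps=200, lr=0.01):
    w = 0.0  # every copy starts from, and keeps, the same shared weight
    for _ in range(steps):
        # Each copy computes an update from its own shard only...
        updates = [gradient(w, shard) for shard in shards]
        # ...then the copies average those updates, so every copy
        # benefits from examples it never saw itself.
        w -= lr * sum(updates) / len(updates)
    return w

# Hypothetical data: the true relationship is y = 3x, split across three copies.
shards = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(4.0, 12.0), (5.0, 15.0)],
]
print(round(train_copies(shards), 3))
```

Running it prints `3.0`: no single copy saw all five examples, but pooling updates lets every copy recover the full relationship y = 3x.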

Hinton also drew a parallel between human memory and AI’s ability to recall information. Recalling past events, he explained, involves a mix of remembering, making assumptions, and sometimes distorting details. Neural networks similarly rely on the connections between neurons to construct plausible responses or outputs. In this respect, neural networks work much like human cognition, blurring the line between human understanding and AI capabilities.

Another concern Hinton raises is the growing autonomy of AI systems. When large models are built into agents, AI systems can act independently, pursuing subgoals within broader plans. Hinton warns that caution is needed in how these subgoals are set: left unchecked, AI systems could generate unintended subgoals with potentially harmful outcomes. Efforts are underway to develop moral chatbots that understand ethical principles, with the aim of making them safer and more reliable.

The rise of AI also brings new cybersecurity threats. Hinton highlights the risks of open-sourcing AI models. Open-sourcing is generally beneficial in software development, but AI models differ: their behavior is shaped by the data they learn from, which can yield unpredictable results. Cybercriminals can exploit open-source models by fine-tuning them for illegal activities such as phishing attacks. Hinton therefore argues against open-sourcing large AI models, because doing so spares criminals the expense of training their own models and makes such threats more widespread.

As AI continues to advance, there are concerns about job displacement. Hinton suggests that routine intellectual labor may go the way of routine manual labor once machines surpass human abilities. Economists differ in their predictions, but the emergence of something more intelligent than humans makes the outcome hard to foresee. The International Monetary Fund (IMF) warns that AI could affect almost 40% of jobs worldwide, replacing some and complementing others, and is likely to worsen inequality.

AI’s progress also raises concerns about its influence on democratic processes. Hinton points out that increasingly convincing fake images, videos, and voices could be exploited during election periods, potentially corrupting the democratic process.

While Hinton advocates regulation and cautious use of AI, he acknowledges that its future remains the subject of ongoing debate. The challenge lies in harnessing AI’s potential while minimizing its risks, so that technological advances serve the betterment of humanity.

Frequently Asked Questions (FAQ)

- What are the main risks associated with AI, according to Geoffrey Hinton?

  Hinton is concerned about AI’s potential to spread misinformation, disrupt the job market, be exploited for cybercrime, and even pose existential threats.

- How do AI neural networks compare with human cognition?

  Neural networks are loosely inspired by the human brain and process data through connections between artificial neurons. Like human memory, they use those connections to construct plausible responses or outputs.

- What is the impact of AI on job security?

  The future impact of AI on jobs is still uncertain, but routine intellectual labor could be taken over by AI, leading to job displacement and greater inequality.

- How is cybersecurity affected by AI?

  Open-sourcing large AI models poses risks, because cybercriminals can fine-tune these models for criminal activities such as phishing attacks.

- What risks does AI pose to democratic processes?

  Increasingly realistic fake images, videos, and voices could be used to manipulate or corrupt democratic processes, especially during election periods.

Sources: RNZ, IMF

- Artificial intelligence (AI): The simulation of human intelligence processes by machines, especially computer systems.

- Neural network: A computer system loosely modeled on the human brain, consisting of interconnected artificial neurons that process and transmit information.

- Misinformation: False or inaccurate information that is spread intentionally or unintentionally.

- Existential threats: Threats that pose a risk to the existence or survival of humanity.

- Regulation: The act of controlling or governing something, often through rules and guidelines.

- Cognitive: Relating to the mental processes of perception, memory, judgment, and reasoning.

- Hive mind: The collective intelligence or knowledge of a group of individuals working together, often used in the context of AI systems sharing knowledge.

- Cognition: The mental processes and abilities related to acquiring, processing, and understanding knowledge.

- Moral chatbots: AI-powered chatbots programmed to understand and adhere to ethical principles.

- Cybersecurity threats: Risks and vulnerabilities in computer systems, networks, and data that can be exploited for malicious purposes.

- Open sourcing: The practice of making source code (or, for AI models, the trained model itself) freely available for others to view, modify, and distribute.

- Phishing attacks: Attempts to deceive people into providing sensitive information or performing harmful actions by impersonating a trustworthy entity through electronic communication.

- Democratic processes: Systems and procedures related to the governance and decision-making of a democratic society.


This article originally appeared on the blog enp.gr.