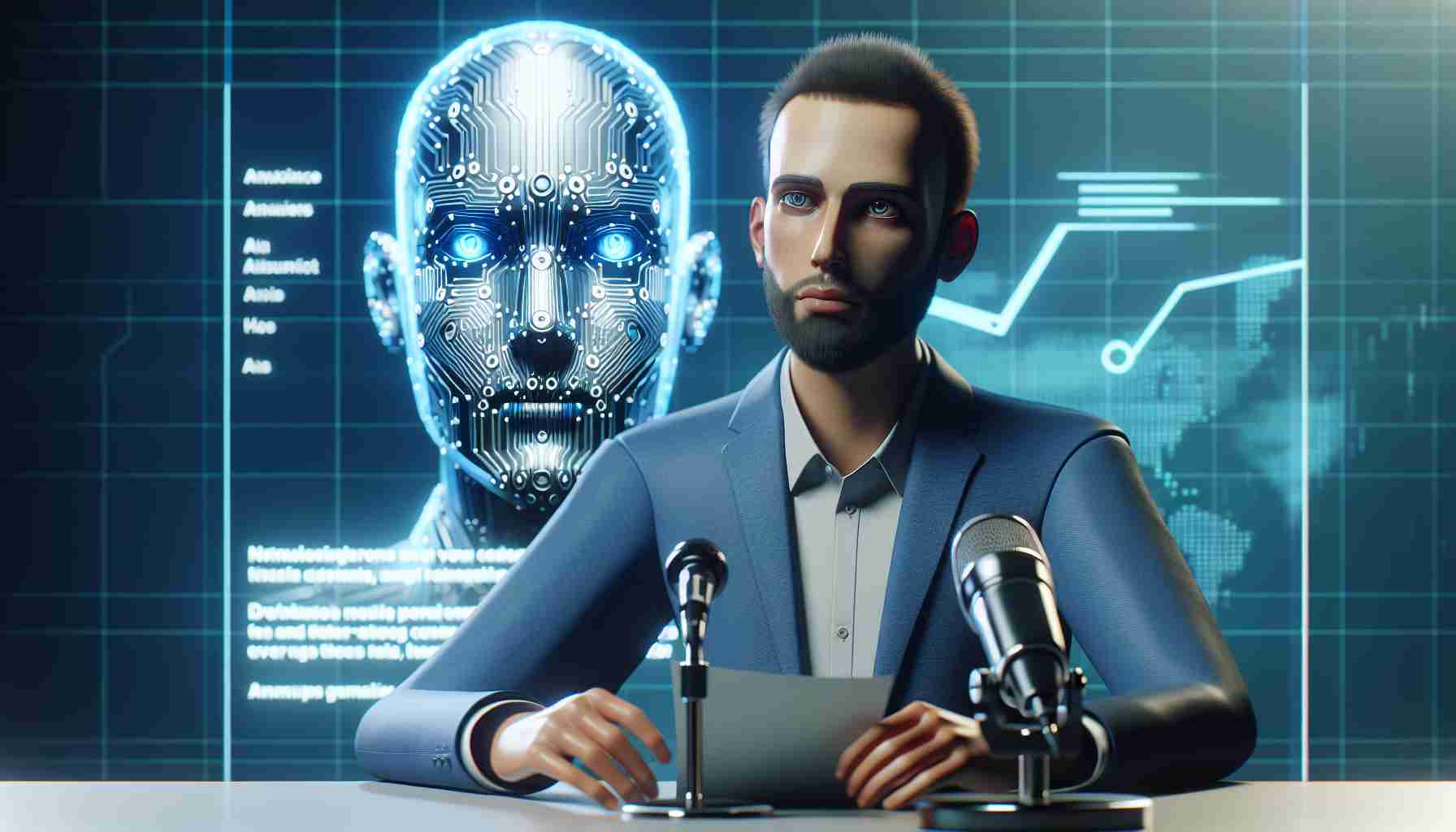

Meta, the parent company of Facebook, Instagram, and other popular social media platforms, has recently unveiled its plans to tackle the issue of AI-generated content, including deepfakes. With important elections looming in both India and the United States, policy-makers are seeking effective strategies to curb the spread of misleading information.

To combat this problem, Meta will introduce a feature that lets users disclose and label AI-generated video or audio when they share it on Meta's platforms. With this tool, Meta aims to give users more information and context about the content they encounter online.

Nick Clegg, President of Global Affairs at Meta, emphasized the importance of this disclosure and label tool, stating that failure to use it may result in penalties. Clegg also said that if the company determines that digitally created or altered content poses a significant risk of deceiving the public, it will add a more prominent label to provide further clarity.

In addition to acting on its own platforms, Meta is working with industry partners to establish common technical standards for identifying AI-generated content, including video and audio. The goal of this collaboration is consistent guidelines and practices across platforms and organizations.

Furthermore, Meta has been using invisible markers, namely IPTC metadata and invisible watermarks, to label photorealistic images created with its own AI technology. These markers align with best practices outlined by the Partnership on AI (PAI), a consortium dedicated to exploring the ethical implications of artificial intelligence.
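To make the idea of an invisible metadata marker concrete, here is a minimal Python sketch of how a reader might check a file for one. It is an illustration under assumptions, not Meta's implementation: the article only says "IPTC metadata", and the sketch assumes the marker is the IPTC Digital Source Type value `trainedAlgorithmicMedia` stored in an embedded XMP packet. The function name `declares_ai_generated` and the sample bytes are hypothetical.

```python
import re

# IPTC NewsCodes term for media created by a trained algorithm (generative AI).
# Assumption: this is the field being checked; the article does not name it.
AI_SOURCE_TYPE = b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"

# XMP metadata is embedded in image files as an XML packet wrapped in <x:xmpmeta>.
XMP_PACKET = re.compile(rb"<x:xmpmeta.*?</x:xmpmeta>", re.DOTALL)


def declares_ai_generated(data: bytes) -> bool:
    """Return True if any embedded XMP packet names the AI digital source type."""
    return any(AI_SOURCE_TYPE in m.group(0) for m in XMP_PACKET.finditer(data))


# Hypothetical sample: a few placeholder bytes followed by a tiny XMP packet.
sample = (
    b"\xff\xd8placeholder-image-bytes"
    b'<x:xmpmeta xmlns:x="adobe:ns:meta/">'
    b"<Iptc4xmpExt:DigitalSourceType>" + AI_SOURCE_TYPE + b"</Iptc4xmpExt:DigitalSourceType>"
    b"</x:xmpmeta>"
)

if __name__ == "__main__":
    print(declares_ai_generated(sample))
    print(declares_ai_generated(b"\xff\xd8no-metadata-here"))
```

A check like this only reports what the file declares. Metadata can be stripped or edited when an image is re-saved or screenshotted, so a missing marker does not show that an image is authentic, which is one reason metadata is paired with invisible watermarks.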

As AI-generated content becomes increasingly prevalent, Meta recognizes the need for ongoing discussions and debates about the identification and authentication of synthetic and non-synthetic content. The company is committed to working with regulators and the industry at large to develop robust tools and strategies to address this evolving challenge.

While the spread of AI-generated content presents unique challenges, Meta’s proactive approach demonstrates a commitment to protecting the integrity and authenticity of digital content. Through collaboration and innovation, the company aims to enable a safer online environment for its billions of daily users.

FAQ Section

1. What is Meta’s plan to address AI-generated content?

Meta plans to introduce a feature that lets users disclose and label AI-generated video or audio when they share it on Meta's platforms. The tool aims to give users more information and context about the content they encounter online.

2. Why is this disclosure and label tool important?

The tool gives users more context about whether the video or audio they encounter was generated by AI. Meta has said that failure to use it may result in penalties. Additionally, if Meta determines that digitally created or altered content poses a significant risk of deceiving the public, it will add a more prominent label to provide further clarity.

3. How is Meta working with industry partners to tackle the issue?

Meta is working with industry partners to establish common technical standards for identifying AI-generated content, including video and audio. The goal of this collaboration is consistent guidelines and practices across platforms and organizations.

4. What markers does Meta use to label photorealistic images created with its AI technology?

Meta uses invisible markers, namely IPTC metadata and invisible watermarks, to label photorealistic images created with its own AI technology. These markers align with best practices outlined by the Partnership on AI (PAI).

5. How does Meta aim to address the evolving challenge of AI-generated content?

Meta is committed to ongoing discussions and debates about the identification and authentication of synthetic and non-synthetic content. It is working with regulators and the industry to develop robust tools and strategies to address this challenge.

Key Definitions

1. AI-generated content: Content that is created or altered using artificial intelligence technology, such as deepfakes.

2. Deepfakes: Synthetic media, typically video, audio, or images, in which AI is used to fabricate or alter a person’s likeness or voice, often to create misleading or deceptive content.

3. Disclosure and label tool: A feature introduced by Meta that lets users disclose and label AI-generated video or audio when they share it on Meta's platforms.

Source: the blog jomfruland.net