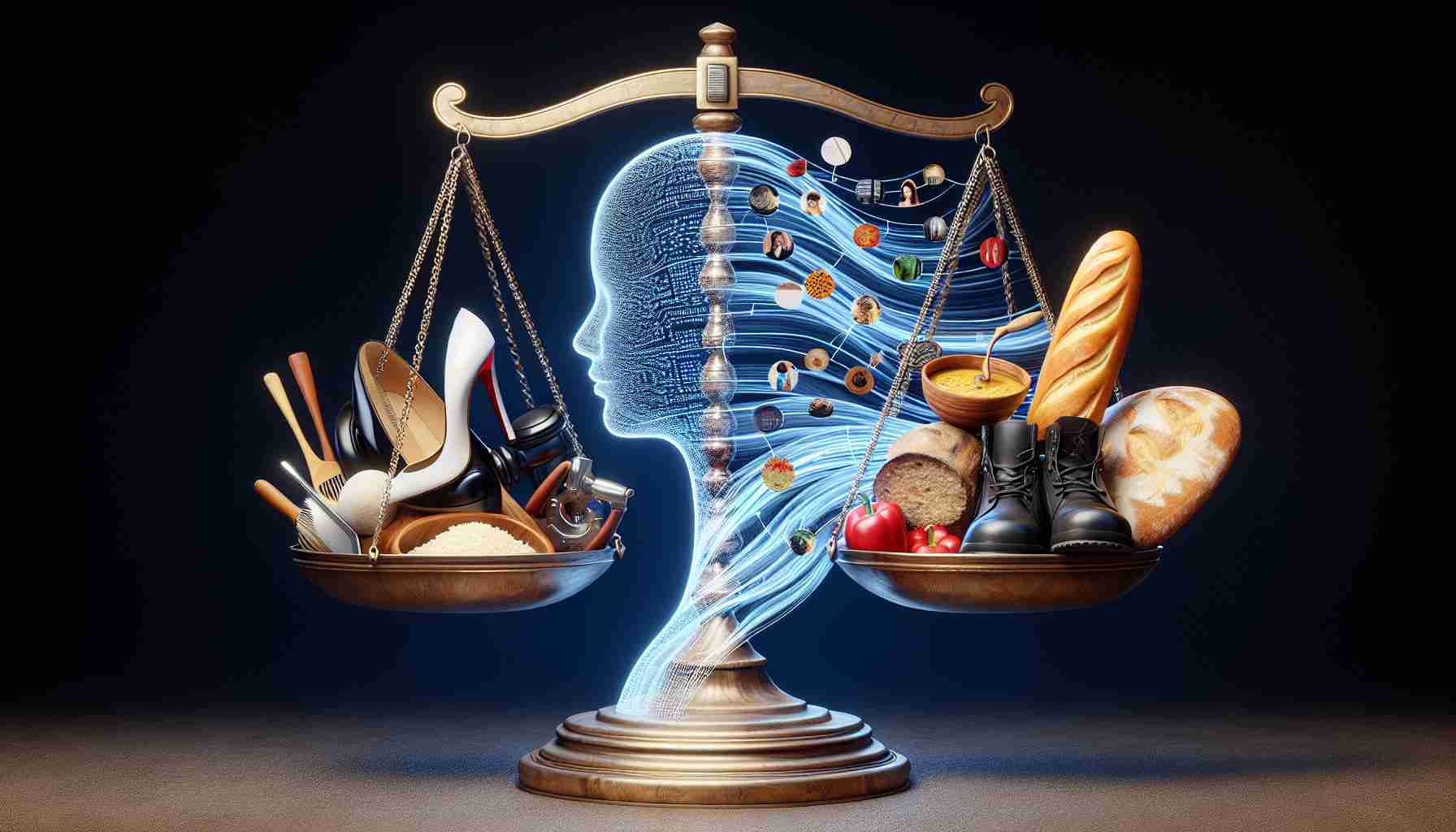

Artificial Intelligence (AI) has become an integral part of daily life, influencing everything from financial decision-making to social media interactions. Much of it goes unnoticed, whether in the responses of a chatbot or in the capabilities of advanced systems like ChatGPT. Beneath its perceived neutrality, however, lies a complex web of social implications, including biases based on race, culture, and gender.

A recent report from the United Nations, released on March 7th, uncovered stereotypes embedded in the natural language processing tools that underpin today’s popular generative AI platforms. The report highlighted that AI outputs often replicate gender biases. When investigators asked AI to write stories about individuals, it assigned men a more prestigious range of roles, such as professor, engineer, and doctor, while women were frequently relegated to stereotypical roles such as cook, housemaid, or even prostitute.
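To make the kind of pattern described above concrete, here is a minimal sketch of how one might tally the occupations a model assigns to characters of each gender. The sample pairs, the `prestige` set, and the function names are hypothetical and chosen only for illustration; they are not the UN report's data or methodology.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (gender of the character, occupation in the story).
# Illustrative only; these pairs are NOT taken from the UN report.
samples = [
    ("man", "engineer"),
    ("man", "doctor"),
    ("woman", "cook"),
    ("man", "professor"),
    ("woman", "housemaid"),
    ("woman", "doctor"),
]

def occupations_by_gender(pairs):
    """Count how often each occupation is assigned to each gender."""
    counts = defaultdict(Counter)
    for gender, occupation in pairs:
        counts[gender][occupation] += 1
    return counts

def share(counts, gender, occupations):
    """Fraction of a gender's stories whose occupation is in `occupations`."""
    total = sum(counts[gender].values())
    if total == 0:
        return 0.0
    hits = sum(counts[gender][occ] for occ in occupations)
    return hits / total

counts = occupations_by_gender(samples)

# Assumed grouping of "prestigious" roles, taken from the examples in the text.
prestige = {"professor", "engineer", "doctor"}

print("men:", share(counts, "man", prestige))
print("women:", share(counts, "woman", prestige))
```

A large gap between the two shares on a real, sufficiently large set of generated stories would be one simple signal of the stereotyping the report describes. A serious audit would also need careful prompt design and statistical testing.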

Experts in the field emphasize that AI’s biases mirror those present in society: the data it learns from is shaped by existing social inequalities. Mailén García, a sociologist and co-founder of the NGO Data Gender, stresses that AI requires data and that this data is often sexist. That idea was the catalyst for Data Gender, where García, together with Ivana Feldfeber and Yasmín Quiroga, analyzes data through a gender lens to address informational imbalances and underrepresentation.

One of their notable projects, Aymurai, collects and publishes data on gender-based violence drawn from judicial rulings. The effort began within the legal system of the Autonomous City of Buenos Aires, with the aim of extending the tool to other judicial departments. A particular focus has been using AI to anonymize sensitive information in court documents, which enables more comprehensive studies of gender violence.

Experts advocate for diverse, representative AI development teams, noting that most designs have historically come from a homogeneous group: white men from the global north, often with no personal experience of discrimination. This lack of diversity can result in technologies that fail to account for gender perspectives and broader societal needs.

The conversation about AI extends beyond technological challenges to societal and ethical considerations, underscoring the importance of intentional regulation and intervention to ensure unbiased, equitable AI development and usage.

Key Question: What are the key challenges or controversies associated with AI reflecting gender and cultural stereotypes?

Key Challenges and Controversies:

– Data Bias: AI systems are often trained on historical data, which reflects past decisions and societal norms that may have been biased.

– Lack of Diversity in AI Development: AI development teams often lack gender and cultural diversity, leading to products that do not fully account for a wide spectrum of human experience.

– Algorithmic Transparency: AI systems can be complex and lack transparency, making it difficult to uncover and correct biases.

– Regulatory Frameworks: There is often a lack of clear, enforceable regulatory frameworks to guide the ethical development and use of AI.

Advantages and Disadvantages of Addressing AI Bias:

Advantages:

– Greater Fairness: Efforts to address biases in AI can lead to more equitable outcomes for all users.

– Broader Market Reach: Products that are fair and inclusive can access a wider market by resonating with diverse users.

– Innovation: A focus on eliminating bias can drive innovation and creative problem-solving in AI development.

Disadvantages:

– Increased Complexity: Addressing bias may add complexity to the AI development process, potentially increasing costs and time to market.

– Uncertain Outcomes: Determining what counts as unbiased can be complicated, and efforts to de-bias AI do not always lead to clear or expected outcomes.

– Continuously Evolving Standards: Standards for fairness and bias evolve over time, requiring ongoing effort and adaptability in AI systems.

For more information and developments on AI and bias, the following organizations are relevant starting points:

– United Nations: You can learn more about the UN’s research and initiatives on AI and biases.

– Data Gender: This organization focuses on gender data and could have relevant information related to the intersection of gender and AI.


The source of this article is the blog yanoticias.es