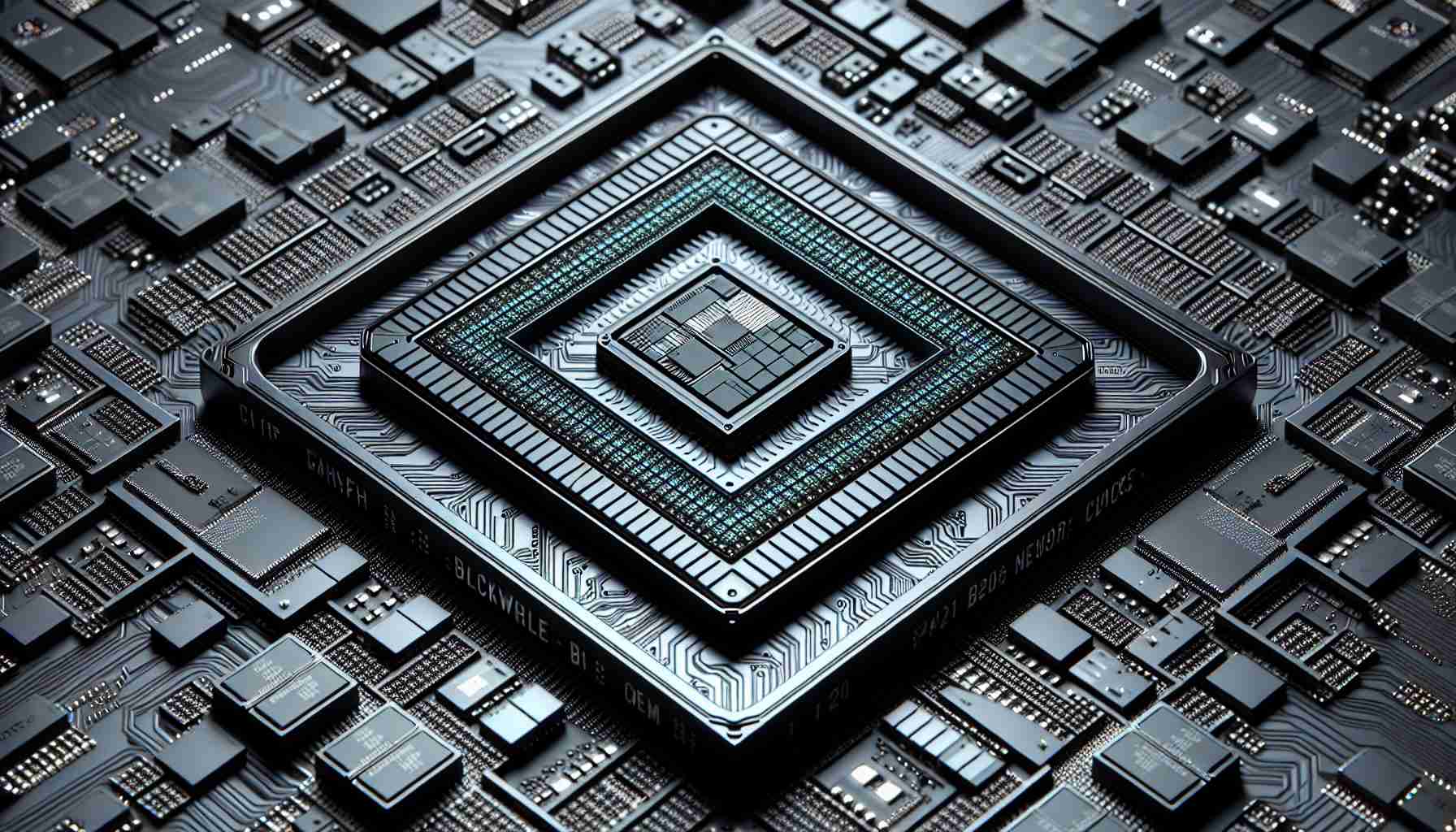

Nvidia recently announced its Blackwell B200 tensor core chip, which the company calls its most powerful single-chip GPU, with 208 billion transistors. According to Nvidia, the B200 can reduce AI inference operating costs and energy consumption by up to 25 times compared to its predecessor, the H100. Nvidia also introduced the GB200, a “superchip” that combines two B200 chips with a Grace CPU for even higher performance.

The announcement was made during Nvidia’s annual GTC conference, where CEO Jensen Huang delivered a keynote speech. Huang emphasized the need for larger GPUs, stating that the Blackwell platform will enable the training of trillion-parameter AI models that surpass the complexity of current generative AI models. For reference, OpenAI’s GPT-3, released in 2020, had 175 billion parameters.

The Blackwell architecture is named after David Harold Blackwell, a renowned mathematician and the first Black scholar inducted into the National Academy of Sciences. This platform introduces six technologies for accelerated computing, including a second-generation Transformer Engine, fifth-generation NVLink, RAS Engine, secure AI capabilities, and a decompression engine for accelerated database queries.

Major organizations including Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI have expressed their intention to adopt the Blackwell platform. Nvidia’s press release includes quotes from notable tech CEOs, including Mark Zuckerberg and Sam Altman, endorsing the platform.

GPUs, originally created for gaming, have proven highly suitable for AI tasks because their massively parallel architecture accelerates the matrix multiplications that neural networks depend on. Nvidia’s focus on data center technologies has contributed significantly to its financial success, with data center revenue ($18.4 billion) far surpassing gaming GPU revenue ($2.9 billion).
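To make the link between matrix multiplication and parallel hardware concrete, here is a minimal sketch in plain Python of one fully connected neural network layer. The function name `dense_layer` and the sample numbers are illustrative assumptions, not anything from Nvidia. The point is that every output cell is an independent dot product, which is the kind of work a GPU can spread across many cores at once.

```python
def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer: outputs = inputs x weights + biases.

    Hypothetical helper for illustration only. It runs sequentially on a CPU;
    a GPU would evaluate many (i, j) cells at the same time.
    """
    rows, inner, cols = len(inputs), len(weights), len(weights[0])
    outputs = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each cell depends only on row i of the inputs and column j of
            # the weights, so no cell has to wait for any other cell.
            dot = sum(inputs[i][k] * weights[k][j] for k in range(inner))
            outputs[i][j] = dot + biases[j]
    return outputs


# Made-up sample data: a batch of 2 inputs with 2 features, mapped to 3 outputs.
batch = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0, 0.0], [0.25, 1.0, 2.0]]
biases = [0.0, 0.5, 1.0]

result = dense_layer(batch, weights, biases)
print(result)  # [[1.0, 1.5, 5.0], [2.5, 1.5, 9.0]]
```

Real models repeat this operation across many layers with matrices that have thousands of rows and columns, which is why hardware that computes many cells simultaneously matters so much.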

The Grace Blackwell GB200 chip is an integral part of the new NVIDIA GB200 NVL72, a liquid-cooled data center computer system designed specifically for AI training and inference. The system consists of 36 GB200s, for a total of 72 B200 GPUs and 36 Grace CPUs, interconnected by fifth-generation NVLink.
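The system's composition follows directly from the superchip's makeup, and a short sketch can spell out the arithmetic. The constants come from the figures quoted in this article. The function name `rack_composition` is hypothetical, and the transistor total is only a simple multiplication of the per-chip figure, not a number published by Nvidia.

```python
# Figures as stated in the article.
GPUS_PER_GB200 = 2                 # each GB200 combines two B200 GPUs
CPUS_PER_GB200 = 1                 # ... with one Grace CPU
TRANSISTORS_PER_B200_BILLION = 208


def rack_composition(num_superchips):
    """Return chip counts for a system built from `num_superchips` GB200s.

    Hypothetical helper for illustration only.
    """
    gpus = num_superchips * GPUS_PER_GB200
    cpus = num_superchips * CPUS_PER_GB200
    return {
        "b200_gpus": gpus,
        "grace_cpus": cpus,
        # GPU transistors only, in billions; Grace CPUs are not counted.
        "gpu_transistors_billion": gpus * TRANSISTORS_PER_B200_BILLION,
    }


nvl72 = rack_composition(36)
print(nvl72)
# {'b200_gpus': 72, 'grace_cpus': 36, 'gpu_transistors_billion': 14976}
```

The "72" in the NVL72 name matches the GPU count this arithmetic produces.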

According to Nvidia, the GB200 NVL72 provides up to a 30x performance increase compared to the same number of NVIDIA H100 Tensor Core GPUs for LLM inference workloads. Nvidia says this speedup brings cost and energy savings and also enables the development of more complex AI models. Compute power shortages have often hindered progress in the AI field, and Nvidia’s technology seeks to address this issue.

While Nvidia’s claims about the capabilities of the Blackwell platform are noteworthy, the true performance and adoption of the technology will depend on its implementation and usage by organizations. Competitors such as Intel and AMD are also vying for a share of the AI market, adding to the competition and driving innovation.

Nvidia expects Blackwell-based products to be available from various partners later this year.

Frequently Asked Questions (FAQ)

1. What is the Blackwell B200 tensor core chip?

The Blackwell B200 tensor core chip is Nvidia’s most powerful single-chip GPU, featuring an impressive 208 billion transistors. It is designed to reduce AI inference operating costs and energy consumption significantly.

2. What is the purpose of the GB200 “superchip”?

The GB200 “superchip” combines two B200 chips and a Grace CPU to enhance performance further. It is intended for advanced AI applications and tasks.

3. What organizations are expected to adopt the Blackwell platform?

Major organizations such as Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI are expected to adopt the Blackwell platform for their AI-based operations.

4. How do GPUs contribute to AI tasks?

GPUs are well-suited for AI tasks due to their massively parallel architecture, which accelerates matrix multiplication tasks necessary for running neural networks efficiently.

5. What is the significance of the Grace Blackwell GB200 chip?

The Grace Blackwell GB200 chip is a key component of the NVIDIA GB200 NVL72 data center computer system. It enables enhanced AI training and inference tasks and offers substantial performance improvements compared to previous GPU models.

6. Can the Blackwell platform lead to the creation of more complex AI models?

Yes, the Blackwell platform’s increased performance and enhanced capabilities can enable the development of more complex AI models. This is particularly beneficial for computationally hungry generative AI models that require significant compute power.

7. What are the potential limitations and competition faced by Nvidia in the AI market?

While Nvidia has made significant claims about the Blackwell platform, its real-world performance and adoption by organizations remain to be seen. Competitors like Intel and AMD are also actively pursuing advancements in AI technology, creating competition in the market.

The announcement of Nvidia’s Blackwell B200 tensor core chip has generated significant interest in the industry. The chip is touted as the most powerful single-chip GPU, with 208 billion transistors. Nvidia claims that the Blackwell B200 can reduce AI inference operating costs and energy consumption by up to 25 times compared to its predecessor, the H100. If that claim holds up in practice, it could make AI computing more accessible for a wide range of applications.

One of the key highlights of Nvidia’s announcement is the introduction of the GB200 “superchip.” This superchip combines two B200 chips with a Grace CPU to deliver even higher performance. It is specifically designed for advanced AI applications and tasks that require significant computational power. The combination of the B200 chips and Grace CPU opens up new possibilities for AI development and research.

In terms of market adoption, a number of major organizations have expressed their intention to adopt the Blackwell platform. Industry giants such as Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI have all shown interest in leveraging this powerful technology for their AI-based operations. The endorsements from tech CEOs like Mark Zuckerberg and Sam Altman further validate the platform’s potential.

The success of Nvidia’s GPUs in the AI market can be attributed to their massively parallel architecture, which accelerates matrix multiplication tasks required for neural networks. This architecture was initially developed for gaming purposes but has found significant success in AI computing. In fact, Nvidia’s data center revenue ($18.4 billion) has surpassed gaming GPU revenue ($2.9 billion), indicating the growing importance of data center technologies for the company.

Nvidia’s Grace Blackwell GB200 chip plays a crucial role in the new NVIDIA GB200 NVL72. This liquid-cooled data center computer system is specifically designed for AI training and inference tasks. It consists of 36 GB200s, comprising 72 B200 GPUs and 36 Grace CPUs interconnected by fifth-generation NVLink. The system provides a significant performance boost, with Nvidia claiming up to a 30x increase compared to the same number of H100 Tensor Core GPUs for LLM inference workloads.

Nvidia expects Blackwell-based products to be available from various partners later this year. However, the true performance and adoption of the Blackwell platform will be determined by its implementation and usage by organizations. Competitors like Intel and AMD are also actively pursuing advancements in AI technology, introducing new challenges and driving innovation in the market.

In conclusion, Nvidia’s Blackwell platform, with its powerful GPUs and enhanced AI capabilities, is poised to make a significant impact in the industry. The adoption of the Blackwell platform by major organizations and the advancements in performance and energy efficiency provide promising prospects for the future of AI computing. However, competition and real-world implementation will ultimately shape the success and growth of this technology.

Source: the blog girabetim.com.br