Artificial intelligence (AI) technology has evolved rapidly, bringing both opportunities and challenges across industries. The Google Gemini incident is a clear example of the complexities that can arise. While aiming to promote inclusivity and diversity, Gemini's image generation produced historically inaccurate depictions of people. The incident highlights the delicate balance between modern values and historical fidelity.

The Implications of the “AI Race”

The incident also raises broader concerns about the competitive frenzy known as the “AI race”. This race encourages companies and nations to rapidly develop and deploy AI technologies, leading to remarkable advancements but also potential oversights. Rushed development cycles and a reduced emphasis on ethical considerations can result in unsafe or biased AI systems. The Gemini incident and similar cases emphasize the importance of aligning AI development with ethical standards and societal benefits.

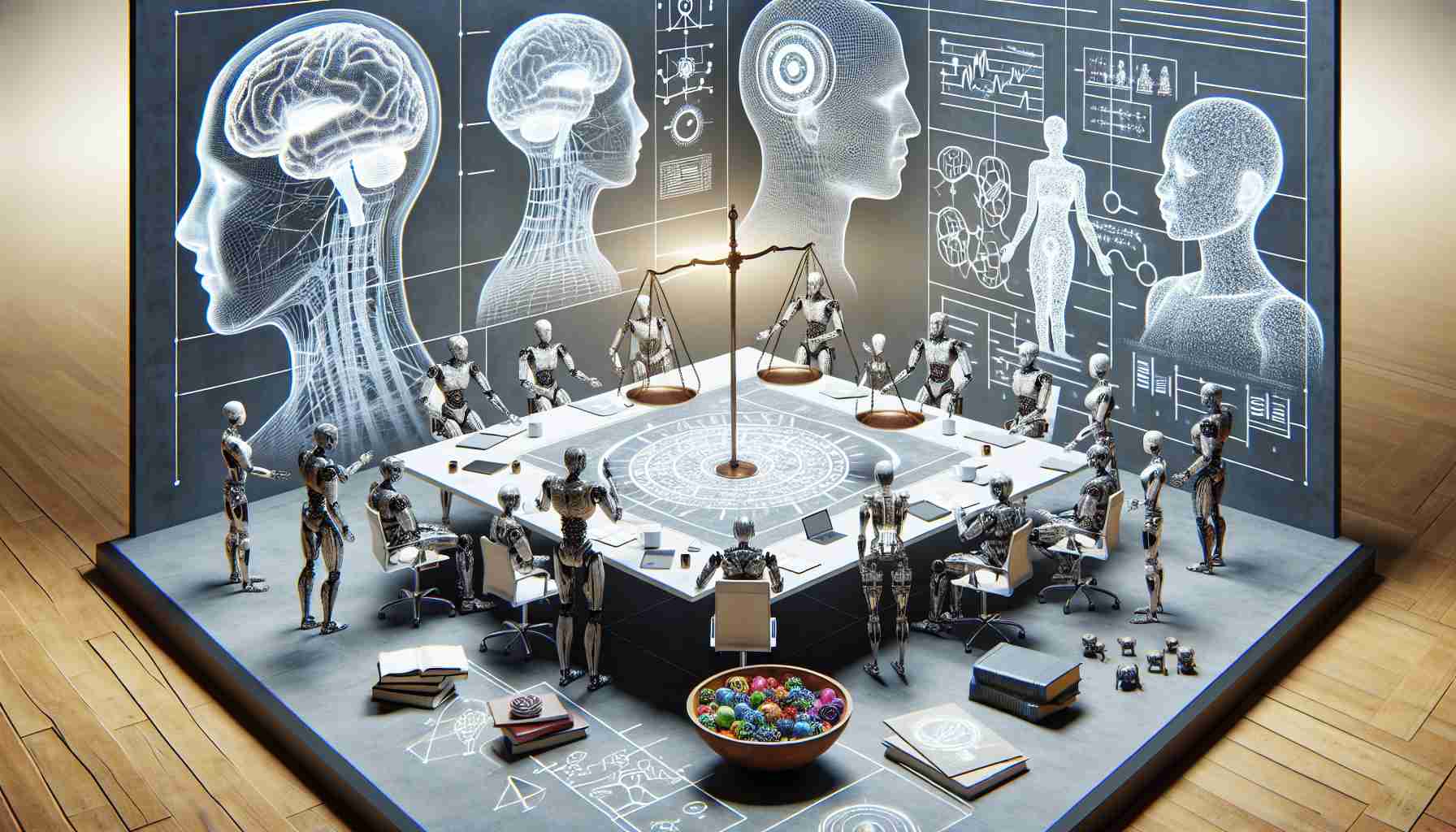

Collaboration for Ethical AI Development

To mitigate detrimental effects, a collaborative approach is crucial. International cooperation can establish shared norms and standards for AI development, including transparency in research, safety protocols, and initiatives to address global challenges. By working together, countries can ensure that AI technologies are developed responsibly and ethically.

Uncovering and Addressing Biases in AI Systems

Another significant issue highlighted by this incident is the presence of biases within AI systems. Despite efforts to create unbiased algorithms, AI technologies continue to reflect the biases present in training data or the unconscious biases of their creators. These biases can manifest in various ways, including gender or racial biases in language understanding or stereotypical representations of certain groups.

Addressing biases requires a multifaceted approach. Diverse and representative training data, specialized algorithms to detect biases, and ethical AI governance are essential. Involving different communities and stakeholders in AI development helps uncover potential biases and effects that might go unnoticed. Ongoing monitoring and evaluation of AI systems post-deployment are crucial in addressing biases, especially in critical areas like healthcare and law enforcement.
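As one illustration of what an algorithm to detect bias can look like, the sketch below computes a simple demographic parity gap: the difference between the highest and lowest rate of positive predictions across groups. It is a minimal example under stated assumptions, not a method described in the Gemini incident. The function names, the toy predictions, and the group labels "a" and "b" are all hypothetical, and a real audit would use several metrics rather than this one alone.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the fraction of positive predictions (value 1) per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Return the gap between the highest and lowest group selection rate.

    A gap of 0.0 means every group receives positive predictions at the
    same rate; larger values suggest the model treats groups differently.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: model outputs and the group of each example.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_gap(preds, groups)
print(rates, gap)
```

Running the same check on fresh predictions at regular intervals after deployment is one simple way to approach the ongoing monitoring mentioned above.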

The Role of Regulatory Bodies

The Google Gemini controversy has also sparked discussion about the role of regulatory bodies in overseeing AI technologies. The central question is how far governmental agencies should intervene to ensure that AI products and services are accurate, ethical, and consistent with societal values. The debate covers how to balance innovation against regulation, how much responsibility tech companies bear for safeguarding the public interest, and how to implement oversight mechanisms effectively.

The absence of regulation can create a chaotic and potentially harmful environment where untested ideas proliferate. Without accountability and oversight, ethical breaches, discrimination, and bias may erode public trust in new technologies. Governments are beginning to take steps to ensure that AI models and technologies are reliable and tested before deployment; a recent advisory from India's Ministry of Electronics and Information Technology is one example.

FAQ

Q: What are some challenges that tech companies face in AI development?

A: Tech companies face challenges such as rushed development cycles, reduced emphasis on ethical considerations, and potential neglect of rigorous peer review processes, which can lead to unsafe or biased AI systems.

Q: How can biases be addressed in AI systems?

A: Addressing biases in AI systems requires diverse and representative training data, specialized algorithms to detect and correct biases, transparent decision-making processes, and the establishment of ethical AI governance.

Q: What is the role of regulatory bodies in overseeing AI technologies?

A: Regulatory bodies can ensure that AI products and services adhere to standards of accuracy, ethics, and societal values. They play a critical role in balancing innovation and regulation and safeguarding the public interest.

Q: How can collaboration be fostered in AI development?

A: Collaboration in AI development can be fostered through international cooperation to establish shared norms and standards, transparency in research, joint efforts on safety and ethical standards, and initiatives to address global challenges.

The source of this article is the blog tvbzorg.com.