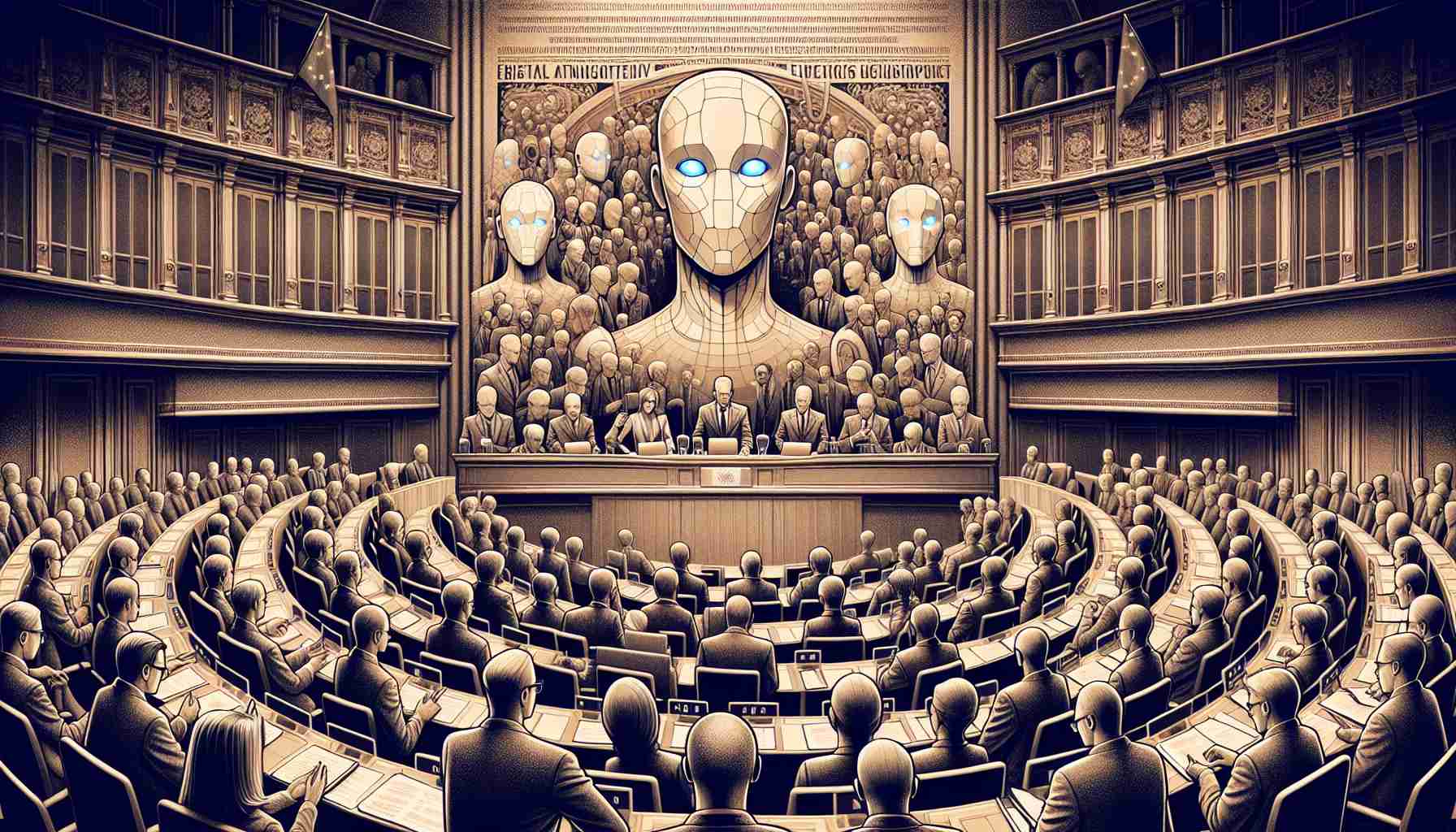

EU lawmakers are poised to enact comprehensive regulations governing the field of artificial intelligence, including systems like OpenAI’s ChatGPT. The proposed rules, first introduced in 2021, aim to strike a balance between safeguarding citizens from potential risks and promoting innovation.

The recent launch of OpenAI’s ChatGPT, supported by Microsoft, has sparked widespread interest in AI software development. However, concerns have also intensified regarding its potential misuse and negative implications. Recognizing the need for oversight, the EU’s “AI Act” takes a risk-based approach, imposing stricter requirements for higher-risk AI systems.

Under these regulations, providers of high-risk AI technologies must conduct thorough risk assessments and ensure their products comply with the law before making them available to the public. The EU’s internal market commissioner, Thierry Breton, emphasized that the approach taken by the regulations is proportionate and focused on minimizing undue regulation while ensuring responsible AI deployment.

The EU’s “AI Act” has achieved widespread acclaim, positioning Europe as a global leader in trustworthy AI. Dragos Tudorache and Brando Benifei, Members of the European Parliament, successfully championed the legislation’s passage, and Tudorache celebrated the EU’s timely action, stating, “The EU delivered. No ifs, no buts, no later.”

Violations of the regulations could result in substantial fines, ranging from 7.5 million to 35 million euros ($8.2 million to $38.2 million), depending on the nature of the infringement and the size of the company involved. The rules explicitly forbid the use of AI for predictive policing, as well as systems that leverage biometric information to infer sensitive personal attributes such as race, religion, or sexual orientation.

The regulations also restrict real-time facial recognition in public spaces, with exceptions granted to law enforcement agencies. However, even in these cases, police must seek approval from a judicial authority before deploying AI systems. This approach strikes a balance between public safety concerns and protecting individual privacy rights.

Following approval in parliament, the 27 member states of the EU are expected to formally endorse the regulations in April. The law will subsequently be published in the EU’s Official Journal in May or June. The provisions pertaining to AI models like ChatGPT will take effect 12 months after the law’s official publication, while most other rules will have a two-year compliance timeline.

The transformative potential of AI in various aspects of Europeans’ lives, coupled with the intense competition among major tech companies in the lucrative AI market, has led to extensive lobbying efforts. French AI startup Mistral AI, Germany’s Aleph Alpha, and US tech giants like Google and Microsoft have all sought to influence the regulations. The impact of corporate lobbying during these negotiations has raised concerns among watchdog groups, who stress the need for clarity and transparency regarding standards, thresholds, and other obligations.

Commissioner Breton has emphasized the EU’s commitment to resisting corporate pressure to exempt large AI models from regulations. He characterized the resulting “AI Act” as a balanced, risk-based, and future-proof framework. However, some tech lobbying groups, such as CCIA, have expressed reservations about certain provisions, claiming that they remain unclear and could impede the development and deployment of innovative AI applications in Europe.

To ensure the successful implementation of the regulations, it will be vital to strike a delicate balance between maintaining a thriving and dynamic AI market and imposing necessary safeguards. Only through comprehensive and diligent execution can the EU ensure that the rules neither unduly burden companies nor hinder their ability to innovate and compete effectively.

[Source: AFP]

FAQ

What is the “AI Act”?

The “AI Act” refers to the comprehensive set of regulations proposed by EU lawmakers to govern artificial intelligence, including systems like OpenAI’s ChatGPT. It aims to protect citizens from potential risks associated with AI while promoting innovation.

What are the key elements of the “AI Act”?

The “AI Act” takes a risk-based approach, imposing stricter requirements on higher-risk AI systems. High-risk AI providers must conduct risk assessments and ensure compliance with the law before making their products available to the public. The regulations also forbid the use of AI for predictive policing and systems that use biometric information to infer sensitive personal attributes.

What are the penalties for non-compliance?

Violations of the “AI Act” can result in fines ranging from 7.5 million to 35 million euros ($8.2 million to $38.2 million), depending on the nature of the infringement and the size of the company involved.

What are the key concerns raised by watchdog groups?

Watchdog groups have expressed concerns about potential weakening of the regulations due to corporate lobbying. They also highlight the need for further clarification on various aspects, such as standards, thresholds, and transparency obligations.

What is the timeline for implementation?

After approval in parliament, the 27 member states of the EU are expected to endorse the regulations formally in April. The law will be published in the EU’s Official Journal in May or June. The provisions specific to AI models like ChatGPT will take effect 12 months after the law’s official publication, while most other rules will have a two-year compliance timeline.

– Artificial Intelligence (AI): The theory and development of computer systems that can perform tasks that typically require human intelligence, such as speech recognition, problem-solving, and decision-making.

– AI Act: The comprehensive set of regulations proposed by EU lawmakers to govern artificial intelligence.

– OpenAI’s ChatGPT: An AI system developed by OpenAI, which is supported by Microsoft, that generates human-like text responses in chat-based interactions.

The source of this article is the blog toumai.es.